These days, everything in software infrastructure is either moving to containers or already running in them. The very first container at Symflower was not for our product, some service or our website: it was for our continuous integration (CI). Starting off at an almost tiny 100MB, our baby Docker container image grew over time into a full-size monster with a whopping 6.2GB. Here is a step-by-step guide on how you can reduce the size of your container images and how we slimmed down our oversized monster image.

As with everything in the world of software development and infrastructure, we can rely on best practices that others have already established by most likely wading through hours of painful research.

Use a smaller base image or libc

Every container image is based upon a so-called base image (see the FROM instruction) that allows you to reuse configurations, packages and other files across different images. Base images allow you to very quickly apply security and bugfix updates to your whole container-based software infrastructure, since a good base image serves as a general common installation. However, this generalization comes at the cost of including more libraries and tools that your application might not need. Choosing a smaller base image is therefore the first and probably the easiest way of reducing the size of your images.

Choose a small base image.

The most used base image, ubuntu, is already very optimized. However, the ubuntu image changed over time and it is worth upgrading. Here is a list of the latest images:

ubuntu:14.04, 197MB

ubuntu:16.04, 135MB

ubuntu:18.04, 63.2MB

ubuntu:20.04, 72.8MB

ubuntu:21.04, 80MB

ubuntu:22.04, 79MB

As you can see, an upgrade is not only worth the time because of better software but also because of the smaller image sizes. (Why the latest versions are a few MB bigger than 18.04 is left as an exercise for the reader. Surely the Ubuntu team is looking into that already.)

Another benefit of keeping your base image up-to-date is that you do not need to run updates as part of your image definition. This saves a lot of space, because tracking the file changes of updates increases your image size dramatically. More about that later when we fully minimize our image layers.

However, if you want to make your base image even smaller, you might need to invest some time in making it compatible with another distribution or even with another libc implementation.

alpine:latest, 5.59MB

busybox:glibc, 4.79MB

busybox:musl, 1.43MB

busybox:uclibc, 1.43MB
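The sizes listed above depend on the exact tag and architecture you pull, so it is worth measuring them yourself. The following is a minimal sketch, assuming a local Docker installation; the helper names `bytes_to_mb` and `print_image_size` are made up for this example.

```shell
# Convert a size in bytes to decimal megabytes (1MB = 1,000,000 bytes).
bytes_to_mb() {
    awk -v bytes="$1" 'BEGIN { printf "%.1f\n", bytes / 1000000 }'
}

# Pull an image and print its uncompressed size as reported by Docker.
# Assumes that the docker CLI is installed and the daemon is running.
print_image_size() {
    image="$1"
    docker pull "$image" >/dev/null
    size_in_bytes="$(docker image inspect --format '{{.Size}}' "$image")"
    echo "$image, $(bytes_to_mb "$size_in_bytes")MB"
}

# Usage:
#   for image in ubuntu:20.04 alpine:latest busybox:musl; do
#       print_image_size "$image"
#   done
```

Keeping the conversion in its own function makes it easy to reuse for any other byte count that Docker reports.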

For the very adventurous, one can also use scratch as a base image. It not only has 0 MB (or in words ZERO MEGABYTES) but is also a no-op (a no-operation), i.e. it does not even add a layer to your image. If you do not want to go that far, there are base images specific to the application runtime you are using, e.g. for Go and Java, without adding any operating system specific files. One example is distroless by Google.

Looking at our monster CI container image

We are on ubuntu:20.04 which has only one Ubuntu competitor, namely 18.04, and we enjoy newer software more than shaving off a few measly MB. Switching to another libc implementation looks worth doing at first, but since our monster image has 6.2GB there are bigger fish to fry, at least for now.

Make the image context smaller

By default, Docker adds all files as the build context when doing a docker build. Hence, if you have a monorepo like us, your build context is HUGE. Such a big build context is usually a problem when you want to build your Docker images fast, as Docker needs to gather the context before actually building any layer of the image. However, there is also a common pitfall: when you add files to your image using the ADD or COPY instructions, you might add more than you actually need if you are adding directories.

Adding files one by one seems like the easiest way at first to make sure that you only add what you need. However, this does not help with your build context and it becomes tedious to maintain all these instructions. There might be an easier way for you.

Always use a .dockerignore file.

A .dockerignore file defines exactly which files are excluded from the build context, and thereby which files remain in it. The default .dockerignore file we are using at Symflower starts with the following lines.

*

.*

These two lines instruct Docker to ignore every visible and hidden file and directory by default. After that we are adding exceptions that should be included. This allows us to exactly define which files should be part of the build context. For example, we might want to include all of our configuration files, so we include the whole configuration directory, but we only want some of our scripts.

*

.*

!bin/retry

!conf/ci/

!scripts/install-tool-go.sh

!scripts/install-tool-java.sh

With this .dockerignore file in place we could now add the directories and files we included one by one, or we could use just one COPY instruction to add them all.

COPY . /some/directory
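To check what a `.dockerignore` file actually lets through, one option is to copy the whole build context into a throwaway image and list it. The following sketch assumes a local Docker installation; the demo files, the `busybox` helper image and the image tag `build-context-demo` are invented for this example and are not part of our setup.

```shell
# Create a small demo project (hypothetical files) with an allow-list style .dockerignore.
demo_dir="$(mktemp -d)"
mkdir -p "$demo_dir/bin" "$demo_dir/conf/ci" "$demo_dir/scripts" "$demo_dir/src"
touch "$demo_dir/bin/retry" "$demo_dir/conf/ci/runner.conf" \
    "$demo_dir/scripts/install-tool-go.sh" "$demo_dir/scripts/unrelated.sh" \
    "$demo_dir/src/main.go"
cat > "$demo_dir/.dockerignore" <<'EOF'
*
.*
!bin/retry
!conf/ci/
!scripts/install-tool-go.sh
EOF

# List every file that ends up in the build context of the given directory.
# Assumes that the docker CLI is installed and the daemon is running.
list_build_context() {
    context_dir="$1"
    tag="build-context-demo:latest"
    # The Dockerfile is read from stdin ("-f -"), so it does not need to be part of the context.
    printf 'FROM busybox\nCOPY . /ctx\n' \
        | docker build --quiet --tag "$tag" -f - "$context_dir" >/dev/null
    docker run --rm "$tag" find /ctx -type f
}

# Usage:
#   list_build_context "$demo_dir"
```

If the ignore rules work as intended, `src/main.go` and `scripts/unrelated.sh` should be absent from the listing.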

Looking at our monster CI container image

Our CI container image is already optimized to the fullest: we have a very strict policy of only adding necessary files to our .dockerignore file, and we are already adding them in one COPY instruction to the image. No dice, let’s see what else we can do.

Fully minimize and tidy up image layers

A container image is defined by executing one image instruction after the other. Such instructions add files, some change the user or some other setting of the image, but most often, instructions actually run some command or full script. Every instruction creates a new layer in the image itself and can be cached and fully shared with other images. There are two problems that are of interest to us for reducing the size of an image: metadata and changed files.

A quick overview of your image layers can be queried with docker history $IMAGE.

Less layers, less metadata, smaller images (???)

Well, this might not even be worth wasting precious characters of this blog article, but when each byte counts, each layer counts. The metadata of an image layer contains information such as which command was used, operating system, history and changes in environment variables. All saved as JSON data. (The location on the file system depends on your Docker setup, we are using overlay2 as storage, which stores all of the metadata in /var/lib/docker/image/overlay2/imagedb/content/sha256/* with our setup.)

To give you an example of how “big” such metadata actually is: our instruction ENV CODENAME focal, which sets the environment variable CODENAME to the value focal and is the first instruction of our image, takes up 1.6KB of metadata, 1621 bytes to be exact. If you are desperate, reducing layers because of their metadata might be worth looking into. Looking at Symflower’s CI image of 6.2GB, such reductions are definitely not worth the time.

Less layers, less changes, smaller images!

An image layer mainly consists of file changes of exactly that layer. This fundamental property of container images helps to share files over multiple images: all layers that are the same are shared over images. So if you have the same base image and the same instructions for installing packages, you will share installing these packages over your images. Sharing is caring. However, with your image sizes in mind, you should rethink if you actually care.

Although image layers are shared, the actual images still include all layers. Hence, if you install packages for image A that are strictly speaking not necessary for image B, you could rethink if sharing layers is actually worth the size.

However, another much bigger problem of image layers saving file changes is that they consist of changes that might be made irrelevant by the next layer. If you are overwriting the same file or even copying a file instead of moving, you have file changes that are strictly speaking not necessary for your final container image. Let’s look at an example.

FROM ubuntu

RUN head -c 10M </dev/urandom > /some-file

RUN head -c 10M </dev/urandom > /some-file

RUN head -c 10M </dev/urandom > /some-file

RUN head -c 10M </dev/urandom > /some-file

RUN head -c 10M </dev/urandom > /some-file

This image definition overwrites the same file 4 times. Hence, only the content written by the fifth instruction is actually usable in the final container image. Build it (e.g. with docker build -t dockerdemo:latest .) and take a look at the size with docker images. With this command you only see the final image size, but you do not see the individual layers.

Another command exists (later we will learn an even better one) that provides the size of the individual image layers: docker history $image (e.g. docker history dockerdemo:latest). Let’s look at the output.

docker history dockerdemo:latest

IMAGE CREATED CREATED BY SIZE COMMENT

e1aaec920c02 1 minutes ago /bin/sh -c head -c 10M </dev/urandom > /some… 10.5MB

4b43bd32d4cb 1 minutes ago /bin/sh -c head -c 10M </dev/urandom > /some… 10.5MB

bb84520dd202 1 minutes ago /bin/sh -c head -c 10M </dev/urandom > /some… 10.5MB

3b0fa6820eb5 1 minutes ago /bin/sh -c head -c 10M </dev/urandom > /some… 10.5MB

212ffe2da361 1 minutes ago /bin/sh -c head -c 10M </dev/urandom > /some… 10.5MB

54c9d81cbb44 5 weeks ago /bin/sh -c #(nop) CMD ["bash"] 0B

<missing> 5 weeks ago /bin/sh -c #(nop) ADD file:3ccf747d646089ed7… 72.8MB

As you can see, we have 5 layers of about 10MB each. This example seems impractical at first, but overwriting files happens all the time. The best example is installing packages, where you are overwriting the same package database files and the same cache files. So how can we get rid of such unnecessary file changes? We can combine instructions, i.e. in our case we can either create a script with all the commands and execute that script, or we can combine all commands into one big command.

FROM ubuntu

RUN head -c 10M </dev/urandom > /some-file \
    && head -c 10M </dev/urandom > /some-file \
    && head -c 10M </dev/urandom > /some-file \
    && head -c 10M </dev/urandom > /some-file \
    && head -c 10M </dev/urandom > /some-file

This gives us the following image layers, which shows that we now store only one file change instead of 5.

IMAGE CREATED CREATED BY SIZE COMMENT

c814ceb72748 1 second ago /bin/sh -c head -c 10M </dev/urandom > /some… 10.5MB

54c9d81cbb44 5 weeks ago /bin/sh -c #(nop) CMD ["bash"] 0B

<missing> 5 weeks ago /bin/sh -c #(nop) ADD file:3ccf747d646089ed7… 72.8MB

Even though we made our instructions harder to maintain, in the end, we want to make our images smaller in size and that is what we did. “Hold on”, you say, “what if I actually want to share layers and what if overwriting file changes is actually part of getting the final result?” If you are only interested in the result of your layers, e.g. because you are building inside your image definition, you are in luck. Docker allows for multi-stage builds which can be used to forward just the results. Hence, you can install and build in separate image layers that can be shared, but only use the result to make up the actual container image.
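As a rough illustration of such a multi-stage build, the following sketch writes a two-stage image definition for a Go program. Everything specific here is an assumption made for the example: the `golang:1.22` builder tag, the `./cmd/app` package path, the binary name and the image tag. The final stage starts from `scratch`, so only the copied binary ends up in the resulting image.

```shell
# Write a two-stage image definition to the given file.
write_multi_stage_dockerfile() {
    cat > "$1" <<'EOF'
# Stage 1: build the binary with the full Go toolchain.
FROM golang:1.22 AS builder
WORKDIR /src
COPY . .
# CGO is disabled so that the binary is statically linked and runs without a libc.
RUN CGO_ENABLED=0 go build -o /out/app ./cmd/app

# Stage 2: start from an empty image and copy only the build result.
FROM scratch
COPY --from=builder /out/app /app
ENTRYPOINT ["/app"]
EOF
}

# Usage (requires Docker and a Go module with a ./cmd/app package in the current directory):
#   write_multi_stage_dockerfile Dockerfile.multistage
#   docker build -f Dockerfile.multistage -t multistage-demo:latest .
```

The layers of the builder stage can still be cached and shared, but none of their file changes are part of the final image.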

If you do not care about caching image layers you can go even one step further: squashing all layers into one. There are tools such as docker-squash or even the --squash build argument for Docker. However, both have limitations and disadvantages, and can only reduce the image size if there are actual file changes that are unnecessary. Those are investigated in the next section.

Less files, less changes, smaller images!

Now that we know that file changes are bad, we can think about which file changes we can get rid of.

Can we remove files that we need for running the image? “NO!” Sorry I asked.

We can remove files that are in the end not used. However, remember that an image layer only saves the changes of its own layer. Hence, we only end up with fewer file changes if the removal happens in the very layer that created the files. One of the best examples of such unnecessary changes is cached files, e.g. if you are installing packages with apt-get (or any other package manager) there will be caches for fetching repository metadata.

The following example installs curl via apt-get:

FROM ubuntu

RUN apt-get update \
    && apt-get install -y curl

This will update the repository metadata and maybe even install other packages that apt-get deems important but that are actually unnecessary. How can we find out which files can be removed? We could export the file system of each layer and look at the differences. Luckily, someone else already took the time to write a tool for finding file differences. It is called dive and we can run it on images using dive $image (e.g. dive dockerdemo:latest). After a while we see lots of information about our image because THIS TOOL IS AMAZING. Let’s take a look.

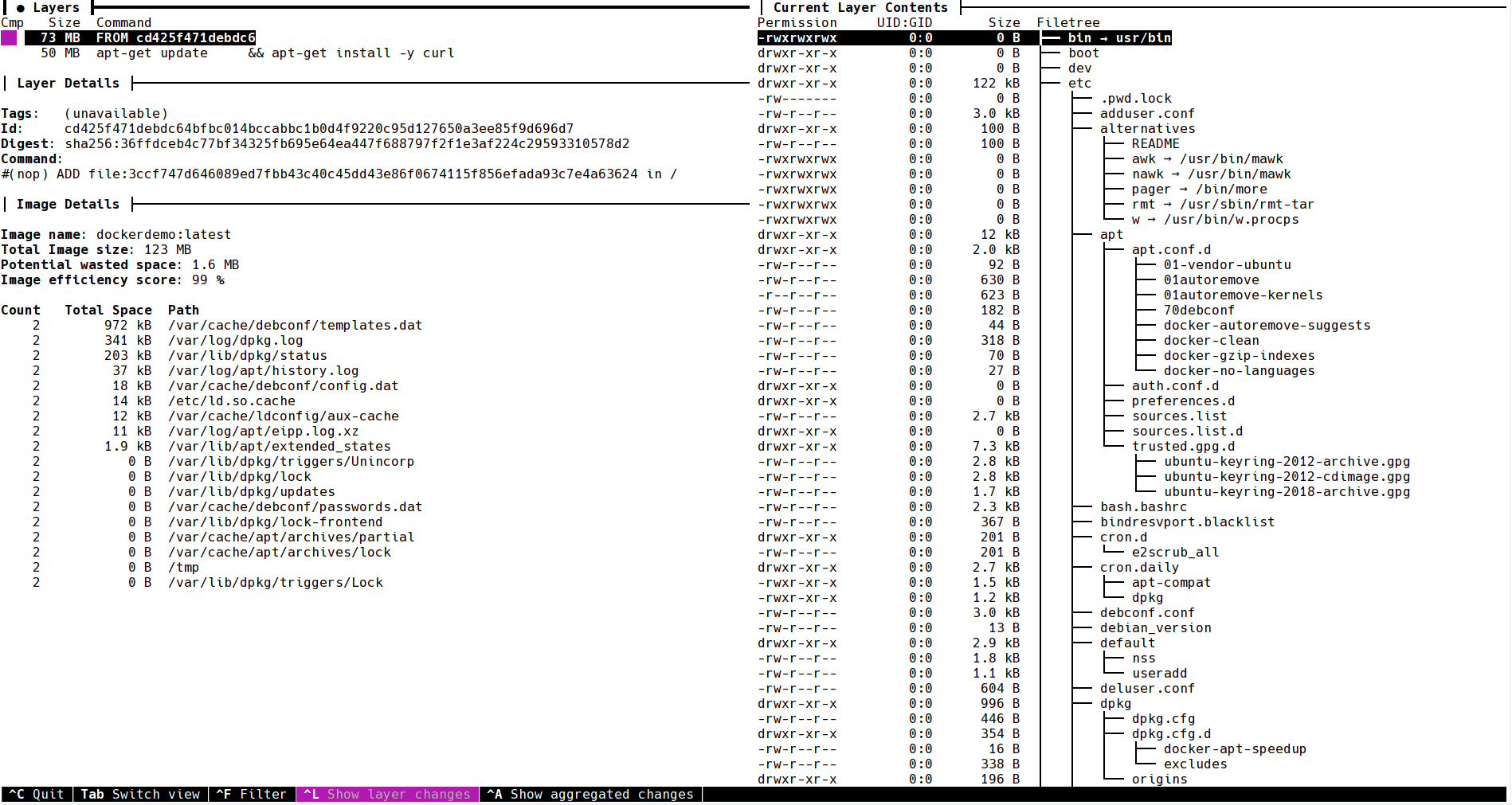

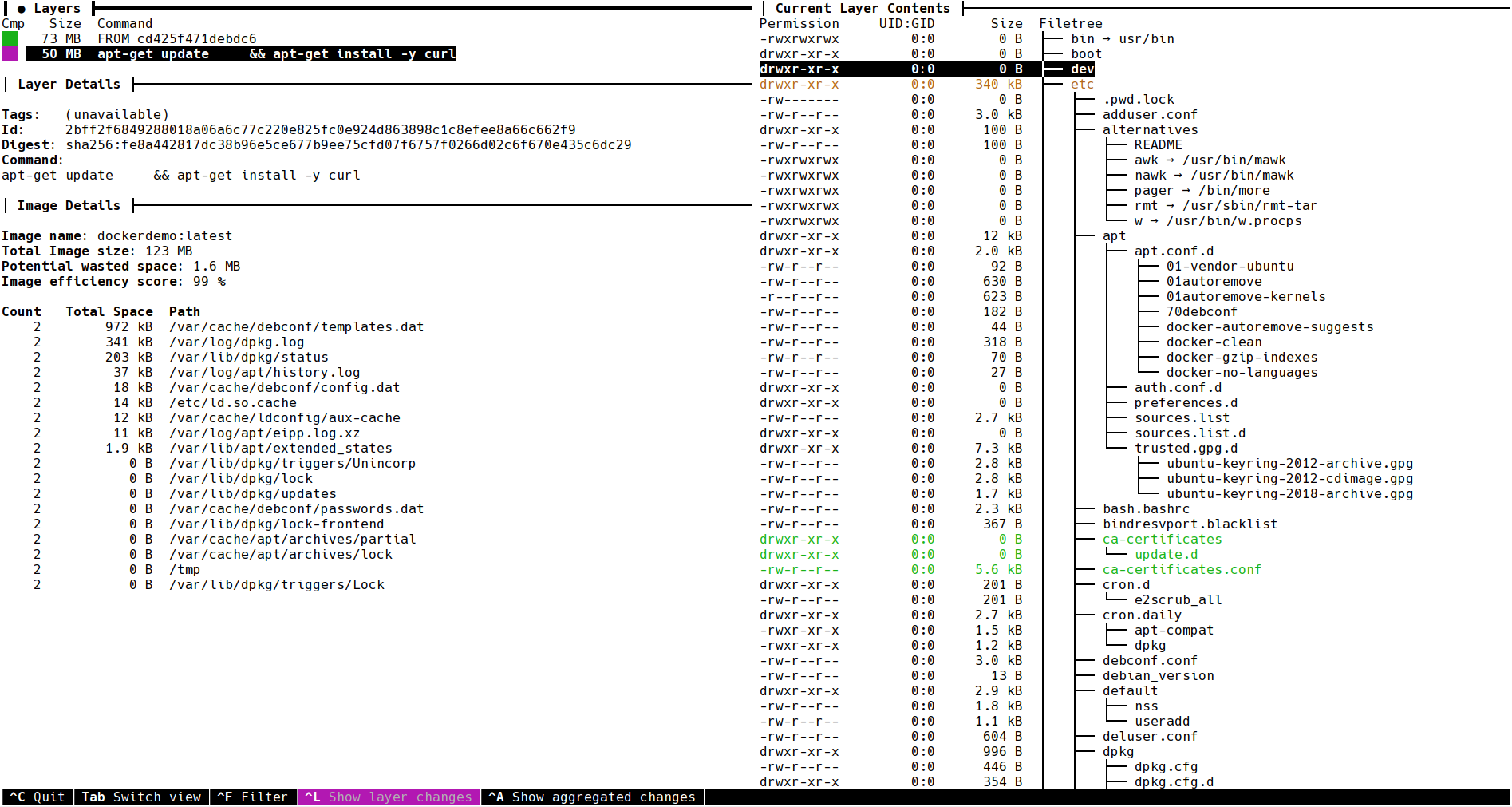

We can see the command and size of each layer in the top-left corner, as well as general information about our container image in the bottom-right corner. There you can see that the total image size is 123MB and that we have 1.6MB of potentially wasted space, which gives us an efficiency score of 99%. The list below shows us the cause of the wasted space: we have files that are overwritten. So instead of manually finding out which files got overwritten and how much space those previous changes waste, we have dive doing that for us. However, 1.6MB might not be worth looking into. Let’s take a look at the right-hand side.

On the right you can see the directories and files of the current layer with their permissions, user and group, but especially with their size. Directory sizes are accumulated with all the files that they contain, which allows us to find the files that waste the most space very fast. Using the cursor keys of your keyboard (up and down) you can switch between the different layers. Changing to our installing layer shows another cool feature of dive:

As you can see, dive also color-codes which directories and files actually change. This allows us to move even faster in finding which files we could remove. Let’s take a look by switching with TAB to the right side and pressing CTRL+SPACE, which collapses all directories. With SPACE you can open individual directories.

With this tooling, we find out that apt-get is saving metadata that we do not need. This includes recommended packages and even documentation that might not be needed. Putting that information together we can, for example, use the following new image definition:

FROM ubuntu

RUN apt-get update \
    && apt-get install -y --no-install-recommends curl \
    && apt-get autoremove -y \
    && apt-get purge -y --auto-remove \
    && rm -rf /var/lib/apt/lists/*

This already yields a reduction by 36MB, which results in an image size of 87MB. Can we go even further? Yes, but that is left as an exercise for the reader, e.g. we do not need documentation in the image.

Looking at our monster CI container image

Let’s look at how many image layers our monster CI container image has, and how big they are:

docker history registry.symflower.com/symflower-testing/runner:dev

IMAGE CREATED CREATED BY SIZE COMMENT

9abb9699218e 19 hours ago /bin/sh -c #(nop) USER service 0B

c8be490e64df 19 hours ago /bin/sh -c CI_PROJECT_DIR=/home/vagrant/symf… 6.09GB

d399bd8871a6 19 hours ago /bin/sh -c #(nop) COPY file:5ed0995fb22221b2… 3.05kB

fd9194a7d21d 19 hours ago /bin/sh -c #(nop) COPY dir:19f4efb68a3bfa984… 6.45MB

7f394a262862 19 hours ago /bin/sh -c #(nop) ENV CI_PROJECT_DIR=/home/… 0B

0ed66b1641f7 19 hours ago /bin/sh -c #(nop) ARG CI_PROJECT_DIR 0B

37a6c13ea680 19 hours ago /bin/sh -c #(nop) ENV KUBERNETES_VERSION=1.… 0B

bfeba0feaa97 19 hours ago /bin/sh -c #(nop) ENV DOCKER_VERSION=20.10 0B

8e0663221048 19 hours ago /bin/sh -c #(nop) ENV CODENAME=focal 0B

54c9d81cbb44 5 weeks ago /bin/sh -c #(nop) CMD ["bash"] 0B

<missing> 5 weeks ago /bin/sh -c #(nop) ADD file:3ccf747d646089ed7… 72.8MB

To reduce the number of layers, we could move the 3 ENV instructions into an existing Shell script, and copy that file with the COPY dir instruction. Additionally, we can merge the COPY file instruction into the COPY dir instruction. However, removing these 4 instructions will only bring us some KBs of savings. That’s not worth the effort. What is the bulk of this image? Clearly the 6.09GB image layer which already screams that there must be something wrong.

Since we cannot reduce layers anymore, we of course need to think about the actual file changes. However, it is important to mention the purpose of this container image: it is meant for our continuous integration (CI), where we build artifacts, e.g. binaries, images and documentation, test source code and artifacts, and deploy artifacts and configurations so one can enjoy the latest Symflower releases, or, you know, fix a bug or two. Most of the work is not done inside the image definition, but within the CI pipelines while actually running the image as containers. Additionally, this image deals with the whole monorepo, including every single component of our CLI product and editor extensions. Moreover, we have tools installed for monitoring, logging and especially debugging to keep track of and investigate every problem that can come up. Optimizing this container image will be very hard.

First, let’s see if ignoring recommended or suggested packages makes a difference for us. For this to happen, either every apt-get install has to receive a --no-install-recommends, or we add the following lines to /etc/apt/apt.conf or in a file in /etc/apt/apt.conf.d to implement it globally:

APT::Install-Suggests "0";

APT::Install-Recommends "0";
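For the variant with a file in /etc/apt/apt.conf.d, a small helper in the image's install script could look like the following sketch. The function name and the file name `99-no-recommends` are arbitrary choices for this example; the optional argument only exists so that the helper can be tried out on a scratch directory first.

```shell
# Write an APT configuration snippet that disables recommended and suggested packages.
# The target directory defaults to /etc/apt/apt.conf.d and can be overridden for testing.
disable_apt_recommends() {
    conf_dir="${1:-/etc/apt/apt.conf.d}"
    cat > "$conf_dir/99-no-recommends" <<'EOF'
APT::Install-Suggests "0";
APT::Install-Recommends "0";
EOF
}

# Usage inside the image, before the first "apt-get install":
#   disable_apt_recommends
#   apt-config dump | grep -E 'Install-(Recommends|Suggests)'
```

Note that every statement in an APT configuration file ends with a semicolon.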

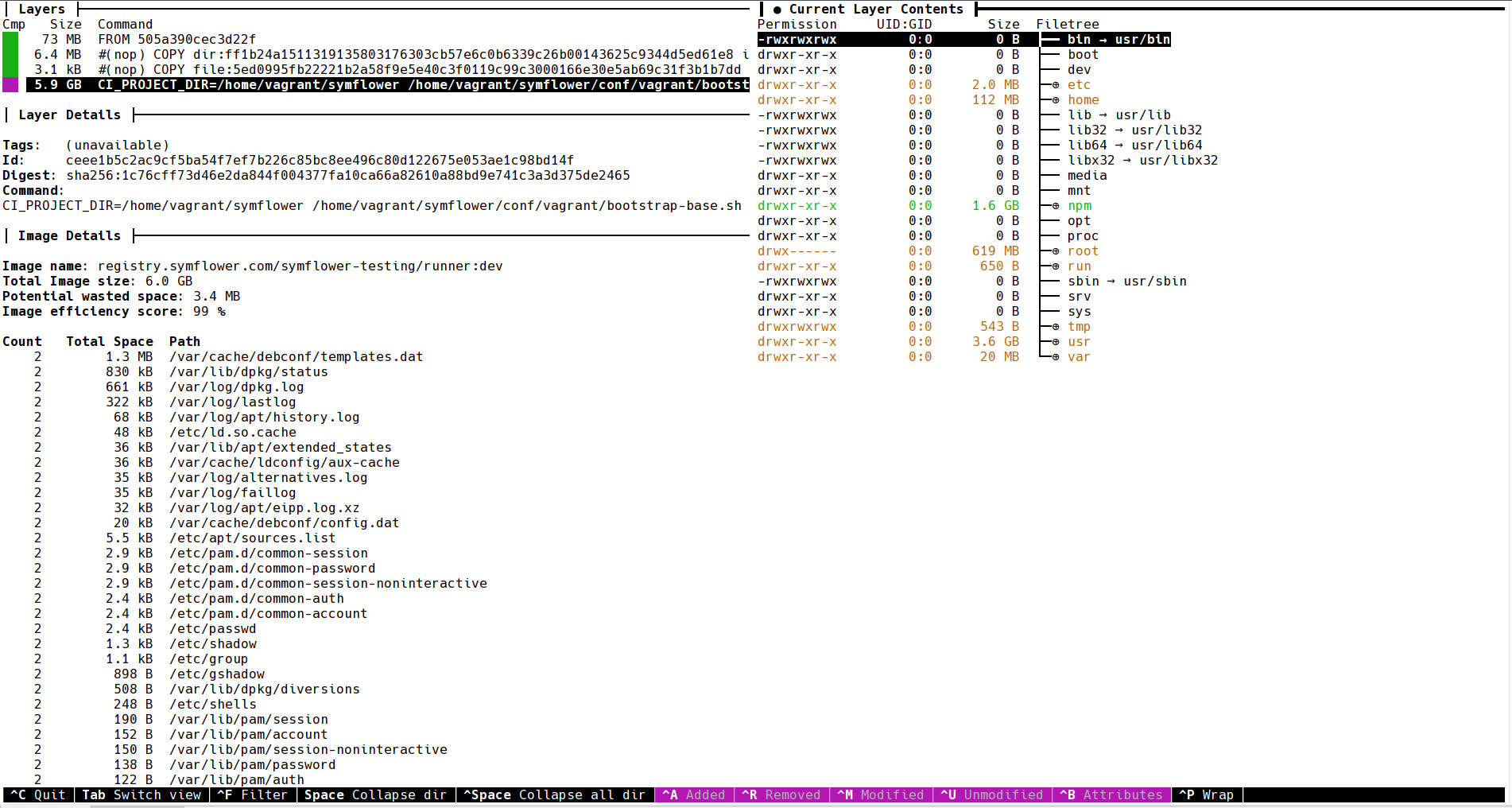

We went with the /etc/apt/apt.conf solution, and this immediately made a difference. That is, we obviously had multiple apt-get install commands without the --no-install-recommends option. The monster CI image went down from 6.09GB to 5.9GB, a saving of about 190MB. Let’s see what else we can find by asking dive for a better overview.

As you can see we do not copy a lot of files to our image, 6.4MB + 3.1KB, because our CI image is mostly about installing software through scripts. Remember, the actual artifacts are only created in the CI pipelines themselves.

We could also try reducing our potential wasted space, i.e. files that have been overwritten. However, 3.4MB seems not worth the effort. Let’s take a closer look at the actual file changes.

There are about 6GB left. Taking a deeper look at the actual size (docker history --human=false $image) we can see that the image layer is “only” 5912MB big. To reduce the size further, we need to take a look at each individual directory and file. We start with the biggest changes, something in /usr, /npm, /root/ and /home. We might make some progress there.
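The raw byte sizes can also be listed and summed with a small helper. This is a minimal sketch, assuming a local Docker installation; the function names `sum_bytes` and `layer_sizes` are made up for this example.

```shell
# Sum up sizes given in bytes, one number per line on stdin.
# printf "%.0f" avoids scientific notation for large totals in some awk implementations.
sum_bytes() {
    awk '{ total += $1 } END { printf "%.0f\n", total }'
}

# Print the size of every layer in bytes together with its command, followed by the total.
# Assumes that the docker CLI is installed and the daemon is running.
layer_sizes() {
    image="$1"
    docker history --human=false --format '{{.Size}} {{.CreatedBy}}' "$image"
    echo "total: $(docker history --human=false --format '{{.Size}}' "$image" | sum_bytes) bytes"
}

# Usage:
#   layer_sizes dockerdemo:latest
```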

The first thing that might catch one’s attention is that we are switching to the user service and have a whopping 619MB in the directory /root. Looking at these files, we saw that it is mainly the cache for the Cypress download. That directory is not available for the user service and since we do not run any background services in our container image, there is also no process that could access these files. Let’s get rid of the whole /root directory by simply removing it at the end of the layer’s script. A saving of 619MB.

Looking at the biggest directory /usr, we saw that 408MB are used by the Go installation, 101MB by the NodeJS installation, 527MB by LLVM, 418MB by Java and 202MB by Chromium. That is 1656MB in total that cannot be reduced any further. This problem continues with other parts of our container image: we see files that we can remove, e.g. documentation, but in the end most files are necessary to build or run certain jobs of our CI. We hit the point where going forward means painstakingly questioning every package and file we have in our container image.
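Per-directory numbers like these can also be gathered without dive, from a shell inside a running container. The following is a minimal sketch using only `du`, `sort` and `head`; the function name `largest_dirs` is made up for this example.

```shell
# Print the biggest direct subdirectories of a directory, largest first.
# Sizes are in kilobytes; the first line is the total of the directory itself.
largest_dirs() {
    dir="$1"
    count="${2:-10}"
    du -k -d 1 "$dir" 2>/dev/null | sort -rn | head -n "$count"
}

# Usage inside a container:
#   largest_dirs /usr 6
#   largest_dirs /root 6
```

Unlike dive, this only shows the final file system and not which layer introduced a file, so it is best suited for a first rough look.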

We have reduced our monster CI container image by 809MB just by taking a quick glimpse at the added files of our biggest layer. For now, this is enough for us. However, before we sum up, we still have an almost obvious solution left to discuss for making a container image smaller.

Splitting up processes and services for breaking up images

In the previous section we explained that our monster CI container image includes everything that we need to handle our whole CI pipeline. However, we never questioned that axiom. We always enjoyed working with this principle because it allowed us to keep our development environment, CI, deployment and debugging tools for all environments in sync without any additional effort. We are building and testing a range of different CI jobs with the same image, even though they are using different languages, libraries and tooling.

If you came to the same conclusion as we did with your container images, it might be time to simply split up your processes and services. This makes building and maintaining your environments and images harder, but gives you an isolation for each image that you can never reach with just one global image. The benefit is clearly that every image can be fine-tuned to the corresponding task or service, e.g. only packages need to be installed which are really necessary.

Takeaways

Slimming down container images is easy when you only have one service in mind that does not need any operating system or debug tooling. Otherwise you need to take a very hard look at every best practice and at the very end, at each individual file change.

Reducing our monster CI container image resulted in a reduction of 0.9GB to a still big image with a size of 5.3GB. However, going forward would mean either painstakingly going over each installed package and file change, or splitting up our CI image for each individual CI job and splitting up our CI jobs to allow for even smaller images.