Inadequate software testing has many drawbacks, such as the risk of bugs and errors, increased development time, and growing technical debt, all covered in previous parts of this series. In this last part of our series on the costs of missing tests, we’re focusing on the reduced confidence (among developers, users, and the market) that insufficient testing can lead to.

How does subpar testing lead to reduced confidence?

In the context of software development, confidence can mean:

- Confidence for the developer that they can rely on already deployed code when adding new features in subsequent releases

- Confidence for the user (actual or potential, i.e. the market) that the application is stable and will perform reliably and as expected, every time

In general, confidence stems from actual, experiential proof that the application works as intended under all circumstances, that is, with all reasonably expected inputs, in every environment it will be used in. All that, of course, can only be achieved if rigorous testing is applied on different levels throughout development.

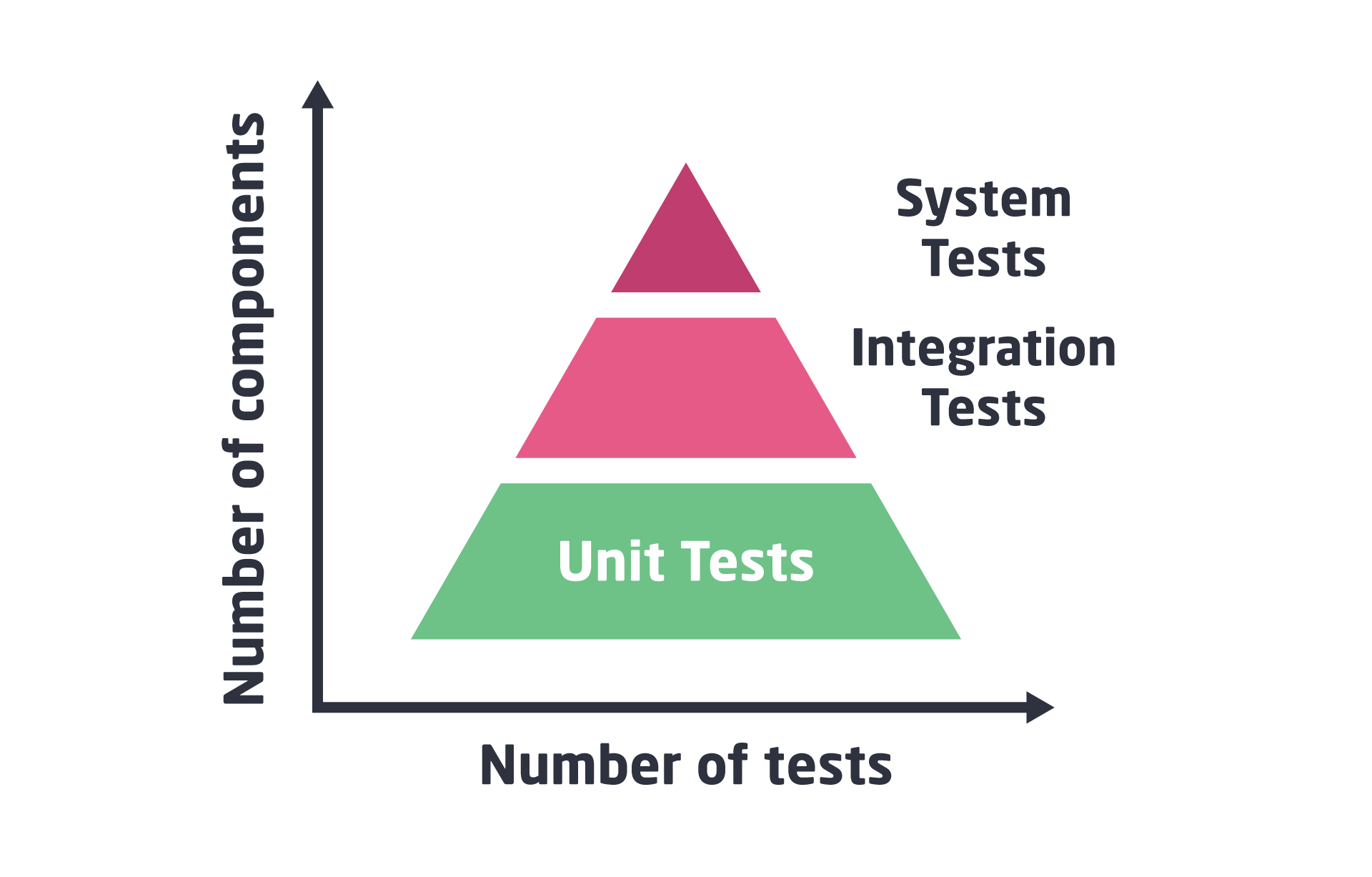

To achieve confidence in your application, you’ll need to apply various types and levels of testing; you can learn more about them in our previous posts. Keep the different code coverage types in mind, but remember that high coverage doesn’t guarantee good quality. Techniques such as mutation testing can further bolster the quality of your software applications.
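To make the point about coverage concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `is_adult`, the hand-written mutant, and the two tiny suites are illustration only, and real mutation testing tools generate and run mutants automatically. The weak suite executes every line of the function, yet it cannot tell the original from the mutant.

```python
def is_adult(age: int) -> bool:
    """Hypothetical function under test: adults are 18 or older."""
    return age >= 18


def is_adult_mutant(age: int) -> bool:
    """Hand-written mutant: '>=' has been replaced by '>'."""
    return age > 18


def weak_suite(fn) -> bool:
    """Runs every line of fn (full line coverage) but skips the boundary."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn) -> bool:
    """Same checks plus the boundary value that separates the two versions."""
    return weak_suite(fn) and fn(18) is True


if __name__ == "__main__":
    # A suite "kills" a mutant when it fails on the mutated code.
    print("weak suite passes on original:", weak_suite(is_adult))
    print("weak suite kills mutant:", not weak_suite(is_adult_mutant))
    print("strong suite kills mutant:", not strong_suite(is_adult_mutant))
```

A surviving mutant like this one is a hint that a test is missing, here the boundary case at exactly 18.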

Adequate testing includes unit testing and appropriate regression testing. Without these, developers are always going to have a level of uncertainty when refactoring code or adding new functionality. This reduced confidence can go as far as a developer not knowing if it is safe to change a line of code. In old legacy systems with a bad architecture, a developer may be wary of making changes for fear of breaking something. Not only is that risky, it also makes it less enjoyable to work on that code base.
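As a rough illustration of how a regression check supports refactoring, the sketch below pins down the behavior of a piece of "legacy" code before it is changed. The shipping-cost functions, the pricing rules, and the recorded cases are all invented for this example.

```python
def legacy_shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical legacy code that nobody dares to touch."""
    cost = 5.0
    if weight_kg > 10:
        cost = cost + (weight_kg - 10) * 0.5
    if express:
        cost = cost * 2
    return cost


def refactored_shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical refactoring that is meant to behave identically."""
    surcharge = max(weight_kg - 10, 0) * 0.5
    multiplier = 2 if express else 1
    return (5.0 + surcharge) * multiplier


# Recorded inputs, including the boundary at 10 kg (assumed for illustration).
CASES = [(0, False), (10, False), (10.5, True), (25, False), (25, True)]


def find_regressions() -> list:
    """Return every recorded case where the two versions disagree."""
    return [
        case for case in CASES
        if legacy_shipping_cost(*case) != refactored_shipping_cost(*case)
    ]


if __name__ == "__main__":
    print("regressions:", find_regressions())
```

With a check like this in place, an empty result is the evidence a developer needs to change the code without guessing whether something broke.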

A piece of software could certainly work without ever earning that level of confidence, but there’s also some chance it won’t, and that lingering “may or may not” is exactly what confidence is all about.

A lack of confidence can be annoying in the case of, say, a yoga app on your phone. But it can also be dangerous in the context of safety-critical applications. A good example of this is the recent recall of the Full Self-Driving feature in Tesla’s various models.

How Tesla’s safety risks reduced confidence and led to recalls

Tesla is the first carmaker to offer what it calls a “Full Self-Driving” (FSD) feature in its cars. The feature is not, of course, fully self-driving, but it gets closer to that than most other solutions on the market. More than 360,000 vehicles have been equipped with the feature, which customers paid $15,000 to access. But due to safety risks, the National Highway Traffic Safety Administration (NHTSA) required Tesla to recall all of its cars with the Full Self-Driving feature.

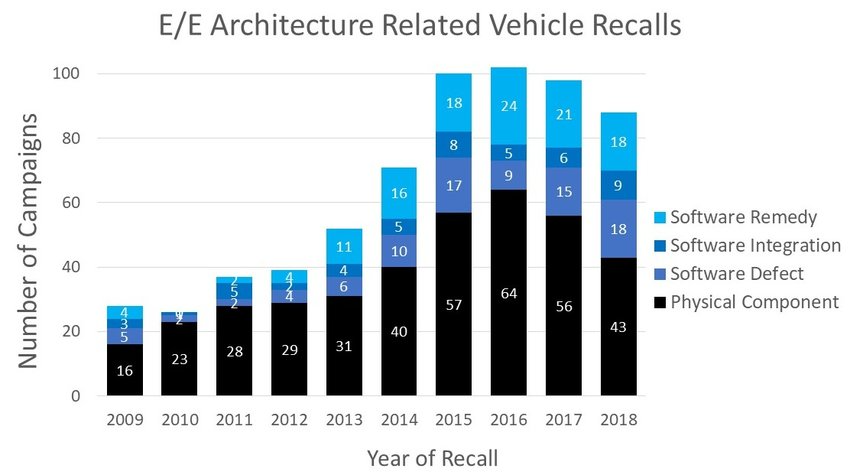

Tesla, of course, is not the only carmaker affected. Automotive recalls have been on the rise for years, and software faults are becoming a key reason. Yet Tesla’s Full Self-Driving failure is a good example that lets us analyze how appropriate software testing plays a role.

The NHTSA claims that Tesla’s Full Self-Driving feature may cause the vehicle to behave unsafely around intersections. That points to insufficient testing, despite Tesla’s advanced “shadow mode testing” concept. In Tesla’s practice, shadow mode testing means that a human drives the car and the self-driving algorithm does not interfere in any way. It does, however, record the journey and make its own driving decisions, which it then compares with the decisions the human driver actually made. That is intended to help the algorithm learn, improve, and better mimic the behavior of human drivers. As impressive as shadow mode testing is, it wasn’t sufficient to ensure the safety of Teslas with the Full Self-Driving feature.
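The comparison step described above can be sketched in a few lines of Python. This is not Tesla’s implementation: the `Frame` structure, the action labels, and the sample drive are assumptions made up purely to show the idea of logging where a non-interfering algorithm would have decided differently from the human driver.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """One recorded moment of a drive (hypothetical structure)."""
    timestamp_s: float
    human_action: str   # what the driver actually did
    shadow_action: str  # what the algorithm would have done


def disagreements(frames: list) -> list:
    """Frames where the shadow decision differs from the human decision."""
    return [f for f in frames if f.human_action != f.shadow_action]


def agreement_rate(frames: list) -> float:
    """Share of frames in which both decisions match."""
    if not frames:
        raise ValueError("no frames recorded")
    return 1 - len(disagreements(frames)) / len(frames)


# Invented sample drive, for illustration only.
DRIVE = [
    Frame(0.0, "keep_lane", "keep_lane"),
    Frame(1.0, "slow_down", "slow_down"),
    Frame(2.0, "stop", "keep_lane"),  # the algorithm would not have stopped
    Frame(3.0, "turn_left", "turn_left"),
]

if __name__ == "__main__":
    print("agreement rate:", agreement_rate(DRIVE))
    for frame in disagreements(DRIVE):
        print("review frame at", frame.timestamp_s, "seconds")
```

Note what such a metric can and cannot show: it only covers situations that were actually driven and recorded, so a high agreement rate says nothing about rare scenarios that never appeared in the data.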

The Administration has identified over 270 crashes in the nine months preceding June 2022 involving any of Tesla’s driver assistance systems. In at least one known case, unexpected braking by the FSD feature caused eight cars to collide.

When talking about confidence in a piece of safety-critical software, “unexpected” is something like a swear word. That’s exactly why this recall has impacted customers' confidence in Teslas, and it may be why some Tesla owners reportedly think that the Full Self-Driving feature wasn’t worth their money.