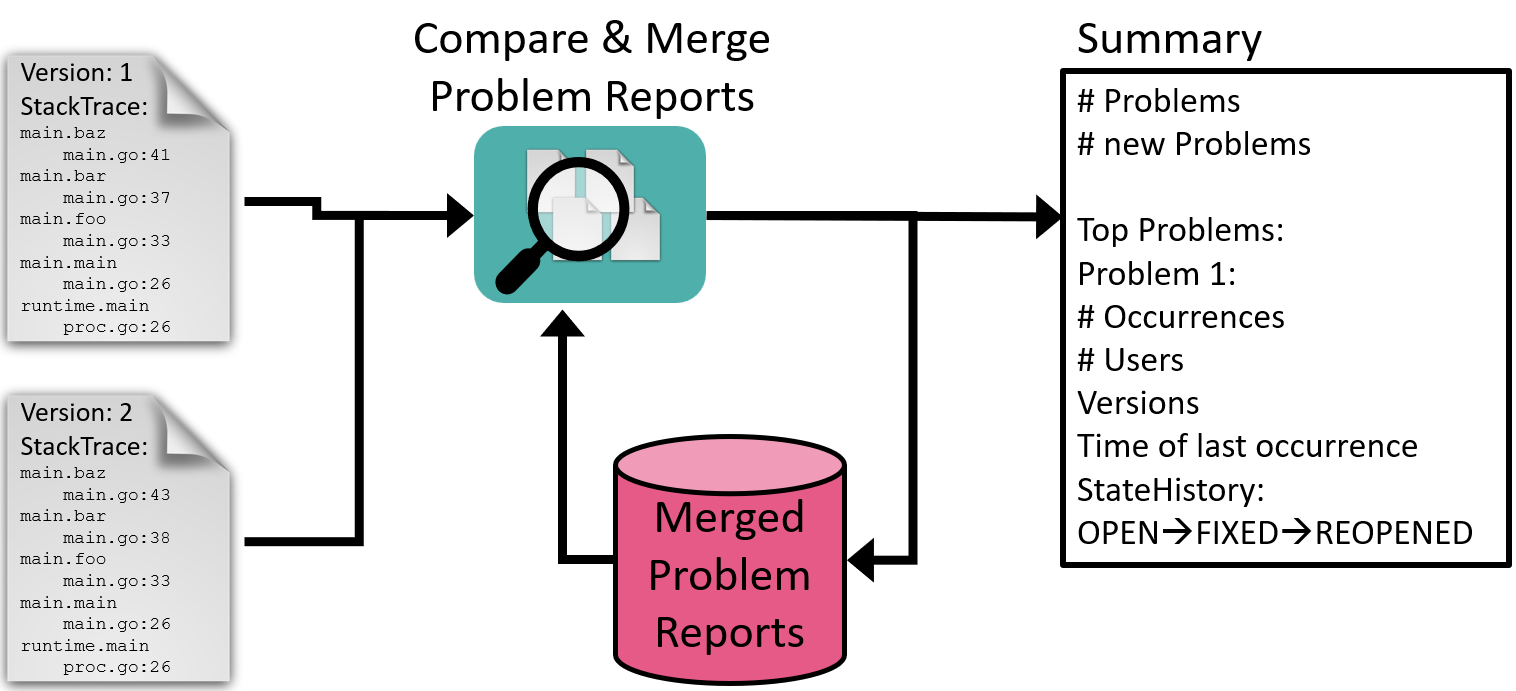

This blog post showcases Symflower’s automatic error reporting process, which involves a novel approach to categorizing large numbers of software problems by combining stack traces and code diffs. This approach allows us to identify whether problems reported from different code revisions (two different commits in your repository) actually represent the same problem. This lets us reduce hundreds of thousands of problem reports to only a few hundred problems, which we then automatically prioritize to reduce time spent and make debugging more efficient.

The solution outlined in this blog post covers all aspects from tracking a problem to prioritizing its fix:

- Collecting telemetry data to identify problems

- Sophisticated stack trace comparison that works across different code revisions

- Deduplication: categorizing (merging) problem reports to remove duplicates

- Automated prioritization to work only on important problems

- Streamlined information to facilitate debugging

Collecting telemetry data

To swiftly identify and resolve problems before aggravating or even losing users, it’s crucial to monitor the execution of software and to gather relevant data. At Symflower, we’ve embraced this practice by collecting telemetry data to identify the problems encountered by our users. However, it’s paramount that we respect the privacy of our users and ensure that sensitive information like code and configuration snippets remains absolutely confidential. The only data transmitted in our problem reports is:

- Symflower version: The version of the tool where the problem occurred.

- Machine identifier: An anonymous identifier for the machine executing the tool.

- Timestamp: The time at which the problem was reported.

- Environment: The context in which the tool was executed (e.g. CLI, VSCode, IntelliJ, etc).

- Message: The error message of the encountered problem (e.g. “a language AST node is unknown”).

- Error data: Optional additional data attached to the error for debugging purposes (e.g. the name of the unknown language AST node).

- Stack trace: The stack trace of the Symflower code that led to the problem.
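To make the shape of such a report concrete, here is a minimal sketch of it as a Python data class. The class name, field names, types and sample values are assumptions made for illustration; only the list of transmitted fields comes from the description above.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ProblemReport:
    """One reported occurrence. It carries no user code or configuration."""

    version: str                  # Symflower version where the problem occurred
    machine_id: str               # anonymous identifier of the machine
    timestamp: datetime           # when the problem was reported
    environment: str              # e.g. "CLI", "VSCode", "IntelliJ"
    message: str                  # error message of the problem
    stack_trace: tuple[str, ...]  # frames of the tool's own code only
    error_data: Optional[dict[str, str]] = None  # optional debugging data


# Made-up sample values, for illustration only.
report = ProblemReport(
    version="1.0.0",
    machine_id="anonymous-machine-1",
    timestamp=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc),
    environment="CLI",
    message="a language AST node is unknown",
    stack_trace=("main.crash main.go:4", "main.main main.go:9"),
    error_data={"node": "ExampleNode"},
)
```

Keeping the report a closed, explicit structure like this makes it easy to review exactly what leaves a user's machine.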

Research on bug reports highlights the importance of including stack traces as they significantly reduce the time spent reproducing and debugging problems. However, stack traces do have one huge disadvantage: different code revisions produce different stack traces that cannot be directly compared, even for the same problem. The following sections describe how we overcome this hurdle.

Dealing with large volumes of software problems

Managing lots of problem reports poses a significant challenge for two key reasons:

- It is difficult to prioritize which problems should be addressed first.

- It is challenging to identify duplicates where the same problem occurred for different users and code revisions.

To tackle these challenges, we first apply a sophisticated analysis approach centered around stack trace comparison.

How to compare stack traces? (A novel approach)

The problem with comparing stack traces from different code revisions is that we cannot rely on the line numbers of the called code, as they likely have shifted due to edits.

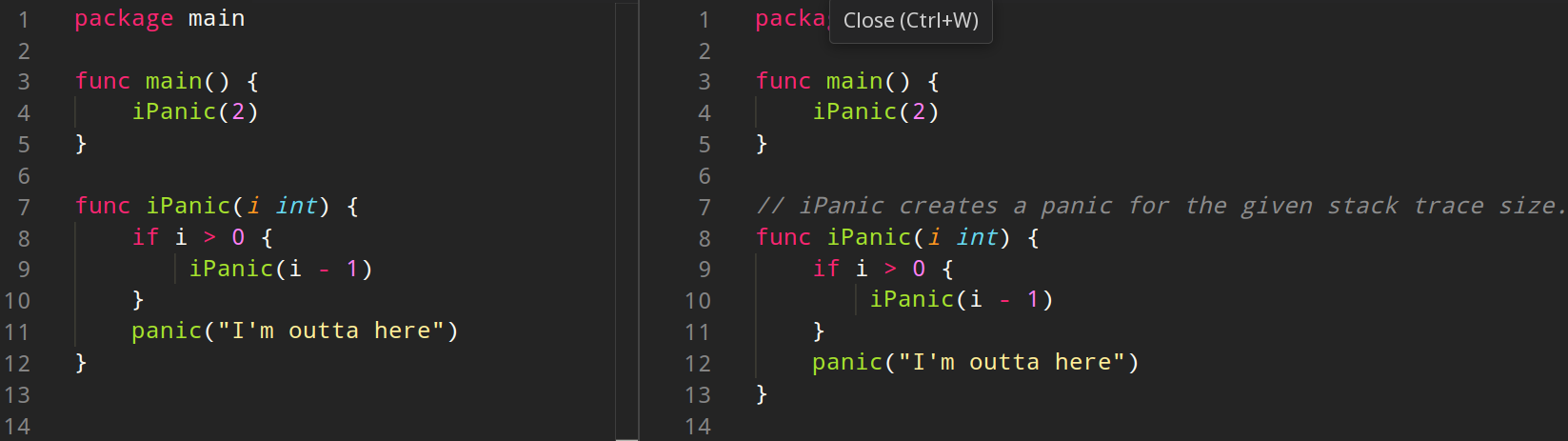

Consider this scenario: by simply adding a comment to describe a function, the lines of code within the function have shifted. Although the “panic” is triggered by the same function call, executing this code produces slightly different stack traces:

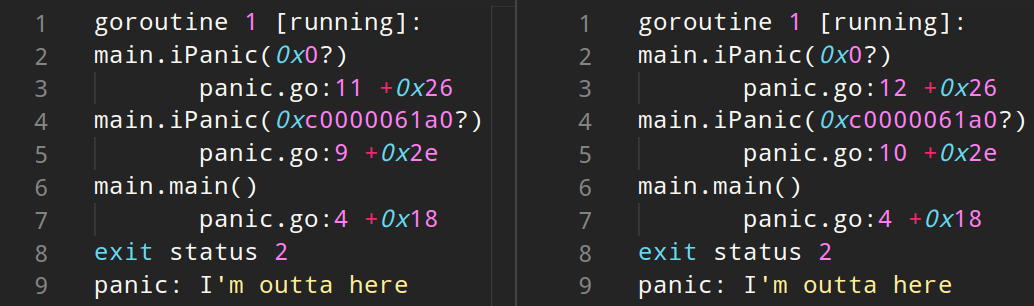

These stack traces are different due to the line shift in the code. Similar differences can arise in stack traces from different software revisions if code was inserted or removed above function calls that generate a stack trace, even though the underlying problem remains unchanged:

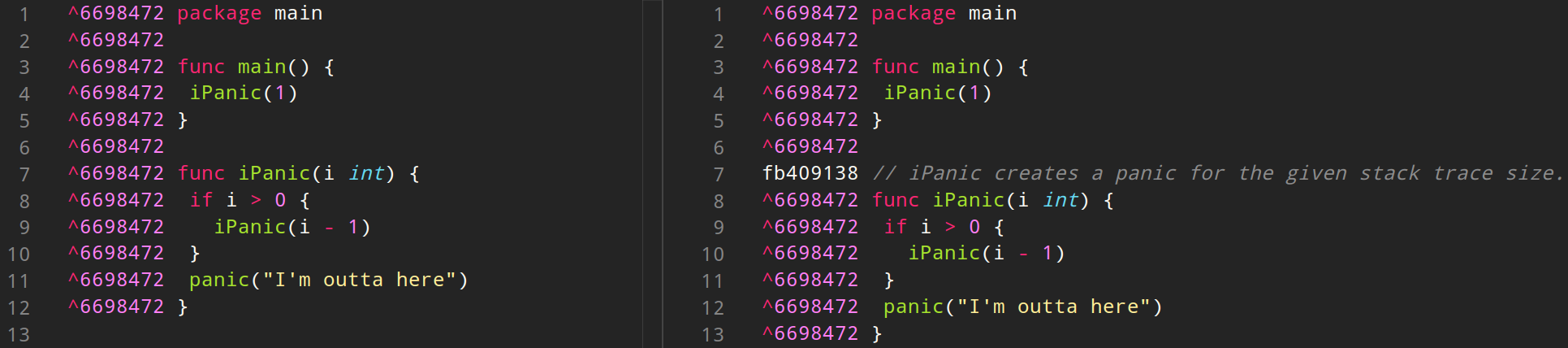

This results in redundant problem reports. To deduplicate them, we analyze the Git repository where the code is developed. If the lines of code responsible for generating stack traces remain unchanged between two versions, despite apparent shifts in the source code files, we infer that the stack traces likely stem from the same underlying problem. Leveraging the standard git blame functionality on the files from both versions, we pinpoint the commit revisions that last altered the specific code lines.

If the referred code lines in the stack trace have been changed by the same commit revisions, we assume the stack traces are in fact equal, thus achieving deduplication.
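The comparison can be sketched as follows. This is a simplified illustration, not Symflower's implementation: the `Frame` type, the `blame` callback and the toy blame table are hypothetical, and including the file and function name in the signature is an assumption. In practice the callback would be backed by running git blame on the file at the given version.

```python
from typing import Callable, NamedTuple


class Frame(NamedTuple):
    file: str
    function: str
    line: int


# Hypothetical lookup: (version, file, line) -> commit that last changed the line.
BlameLookup = Callable[[str, str, int], str]


def trace_signature(version: str, frames: list[Frame], blame: BlameLookup) -> tuple:
    """Replace each line number with the commit that last touched that line."""
    return tuple(
        (frame.file, frame.function, blame(version, frame.file, frame.line))
        for frame in frames
    )


def same_problem(version_a: str, frames_a: list[Frame],
                 version_b: str, frames_b: list[Frame],
                 blame: BlameLookup) -> bool:
    """Two traces are treated as equal if their blame-based signatures match."""
    return (trace_signature(version_a, frames_a, blame)
            == trace_signature(version_b, frames_b, blame))


# Toy blame data: an added comment in "v2" shifted every line down by one,
# but the lines themselves were last changed by the same commits.
TOY_BLAME = {
    ("v1", "main.go", 4): "abc123",
    ("v2", "main.go", 5): "abc123",
    ("v1", "main.go", 9): "def456",
    ("v2", "main.go", 10): "def456",
}


def toy_blame(version: str, file: str, line: int) -> str:
    return TOY_BLAME[(version, file, line)]


old_trace = [Frame("main.go", "main.crash", 4), Frame("main.go", "main.main", 9)]
new_trace = [Frame("main.go", "main.crash", 5), Frame("main.go", "main.main", 10)]

print(same_problem("v1", old_trace, "v2", new_trace, toy_blame))  # True
```

Because the signature no longer contains line numbers, edits that merely shift code do not change it, while a commit that rewrites one of the calling lines does.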

Limitations:

- We could make the wrong assumption about two problems being the same if the control flow above a call has changed, but the call itself was not touched by a commit. Code might have been introduced between two versions that changes the behavior of some functions, but leads to similar stack traces for different problems.

- If identical function calls occur multiple times within the same function with matching commit revision numbers, stack traces will appear equal but may denote different problems.

Despite these limitations, this approach lets us significantly improve the efficiency of resolving faults: instantly reducing hundreds of thousands of problems to just a few hundred.

Tracking problems

Through automated daily analyses, we merge problem reports to create representatives for all occurrences.

For each of these problem representatives we store the following data in the merged report:

- Checksum: A unique identifier for the problem, generated when it is first recorded.

- Stack trace: The stack trace encapsulating the sequence of events leading to the reported issue, obtained from the latest Symflower version it occurred in.

- Stack trace version: The version identifier associated with the stored stack trace, enabling further comparisons.

- Messages: Messages accompanying the problem reports, providing context and insights into the nature of the issues encountered.

- Error data: A collection of the error data extracted from the problem reports, aiding in debugging and resolution efforts.

- Environments: An aggregation of the environments in which the errors manifested, along with the respective occurrence frequencies for each environment.

- Versions and machines: A mapping of the Symflower versions wherein the problem occurred, linked to unique machine identifiers, along with the frequency of occurrences on each machine.

- Last occurrence: A timestamp indicating the most recent instance of the problem being reported.

- State history: A chronological record detailing the lifecycle states traversed by the problem, by storing each state object over the entire history.
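A rough sketch of how individual reports could be folded into such representatives is shown below. All names are hypothetical, reports are plain dictionaries for brevity, and each report is assumed to already carry a `signature` key that is equal for reports describing the same problem. Deriving the checksum from that signature is a simplification, and only a subset of the stored fields is modeled.

```python
import hashlib
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class Representative:
    checksum: str
    messages: set = field(default_factory=set)
    environments: Counter = field(default_factory=Counter)
    # version -> machine identifier -> number of occurrences
    versions: dict = field(default_factory=lambda: defaultdict(Counter))
    last_occurrence: str = ""


def merge_reports(reports):
    """Group reports by signature and aggregate their data."""
    merged = {}
    for report in reports:
        signature = report["signature"]
        if signature not in merged:
            checksum = hashlib.sha256(signature.encode()).hexdigest()[:12]
            merged[signature] = Representative(checksum=checksum)
        rep = merged[signature]
        rep.messages.add(report["message"])
        rep.environments[report["environment"]] += 1
        rep.versions[report["version"]][report["machine"]] += 1
        # ISO 8601 UTC timestamps in one format sort chronologically as strings.
        rep.last_occurrence = max(rep.last_occurrence, report["timestamp"])
    return merged


# Made-up sample data, for illustration only.
reports = [
    {"signature": "sig-a", "message": "a language AST node is unknown",
     "environment": "CLI", "version": "1.0.0", "machine": "m1",
     "timestamp": "2024-01-10T08:00:00Z"},
    {"signature": "sig-a", "message": "a language AST node is unknown",
     "environment": "VSCode", "version": "1.1.0", "machine": "m2",
     "timestamp": "2024-02-01T12:00:00Z"},
    {"signature": "sig-b", "message": "example of a different problem",
     "environment": "CLI", "version": "1.1.0", "machine": "m1",
     "timestamp": "2024-01-20T10:00:00Z"},
]

merged = merge_reports(reports)
```

Here three reports collapse into two representatives, and the first one records both environments and both machines it was seen on.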

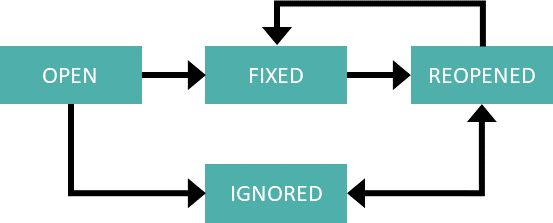

The problem representatives follow a lifecycle similar to those found in bug reporting tools, but with state transitions automated wherever possible:

- OPEN: Indicates reported problems that have not yet been addressed. Issues addressing the problem are referenced via issue numbers from GitLab.

- FIXED: Once a developer resolves the problem, it transitions to the fixed state. Here, we also record the Symflower version in which the problem was resolved and the Git commit revision numbers associated with the fixes. This additional information helps trace the changes made to address the problem.

- REOPENED: In cases where a previously fixed problem resurfaces in a newer version, our analysis automatically reopens the issue for further attention. Similar to the OPEN state, we maintain a list of issue numbers for reference.

- IGNORED: Problems that cannot currently be resolved are marked with this state to avoid wasting time on known but unimportant problems. Problems that depend on pending features on our development roadmap also get this designation and are filtered out of subsequent analyses. For this state, we store a string explaining why the problem was marked as ignored.
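The automatic reopening step could look roughly like the sketch below. The `State` type and the function names are hypothetical. It also assumes that versions are dotted integers and that `fixed_in` names the first version containing the fix, so any occurrence in that version or a later one counts as a regression.

```python
from dataclasses import dataclass


@dataclass
class State:
    name: str            # "OPEN", "FIXED", "REOPENED" or "IGNORED"
    fixed_in: str = ""   # FIXED only: version that contains the fix
    commits: tuple = ()  # FIXED only: commit revisions of the fix
    issues: tuple = ()   # OPEN and REOPENED: referenced issue numbers
    reason: str = ""     # IGNORED only: why the problem is ignored


def parse_version(version: str) -> tuple:
    """Turn "1.10.2" into (1, 10, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def record_occurrence(history: list, seen_in_version: str) -> list:
    """Append REOPENED if a FIXED problem shows up in the fix version or later."""
    current = history[-1]
    if (current.name == "FIXED"
            and parse_version(seen_in_version) >= parse_version(current.fixed_in)):
        history.append(State("REOPENED"))
    return history


history = [State("OPEN", issues=(1,)), State("FIXED", fixed_in="1.2.0", commits=("abc123",))]

record_occurrence(history, "1.1.9")   # older version: the fix is not in it yet
print(history[-1].name)               # FIXED

record_occurrence(history, "1.10.0")  # newer than 1.2.0: the fix did not hold
print(history[-1].name)               # REOPENED
```

Appending a new state instead of overwriting the old one keeps the full state history available.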

So far, we discussed how we identify unique problems from a pool of reported occurrences and how we track their state. We use this data to support our developers by prioritizing the problems by:

- the number of users that have encountered them,

- the number of overall occurrences,

- and when these problems last happened.

To relay these insights effectively, we generate detailed summaries from our analysis. These summaries include:

- The total count of unique problems identified, offering a comprehensive overview of the current error landscape.

- A breakdown of the top 10 prioritized problems, highlighting those most frequently encountered and impacting users.

- Identification of new problems that have surfaced since the last analysis, shedding light on emerging challenges.

- Highlighting reopened problems, indicating instances where previously fixed issues have resurfaced, ensuring continuous vigilance and proactive problem-solving.

Through these measures, we provide our developers with actionable insights and enable them to focus their efforts to promptly address critical issues:

- Problems that occur increasingly often and affect many users are automatically marked as important. This helps to fix emerging problems right away before they become broader annoyances in our user base.

- Problems that have been fixed and occur again with a newer version are obviously not fully fixed. These problems are automatically marked with a higher priority as this category of bugs tends to be most annoying for the user.

- Problems that occur less often but for many users are prioritized over problems that occur very often but only for a few users. This helps to make sure that common problems are fixed first, before specific ones that might only apply to a handful of users.

- Problems that used to be frequent but haven’t recently occurred can be considered “fixed”, despite not having been explicitly addressed. This helps the team focus on real, up-to-date problems.
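One way to express these rules is a sort key plus a staleness filter, as in the sketch below. The ordering of the criteria, the 90-day cutoff, the field names and the sample numbers are all assumptions chosen for illustration, not the values used by Symflower.

```python
from datetime import datetime, timedelta


def priority_key(problem: dict) -> tuple:
    """Higher tuples sort first: reopened, then users, occurrences, recency."""
    return (
        problem["state"] == "REOPENED",
        problem["users"],
        problem["occurrences"],
        problem["last_occurrence"],
    )


def prioritize(problems: list, now: datetime,
               stale_after: timedelta = timedelta(days=90)) -> list:
    """Drop problems not seen recently, then sort by descending priority."""
    active = [p for p in problems if now - p["last_occurrence"] <= stale_after]
    return sorted(active, key=priority_key, reverse=True)


now = datetime(2024, 6, 1)
# Made-up sample data, for illustration only.
problems = [
    {"id": "A", "state": "OPEN", "users": 2, "occurrences": 500,
     "last_occurrence": datetime(2024, 5, 30)},
    {"id": "B", "state": "OPEN", "users": 40, "occurrences": 60,
     "last_occurrence": datetime(2024, 5, 28)},
    {"id": "C", "state": "REOPENED", "users": 3, "occurrences": 5,
     "last_occurrence": datetime(2024, 5, 31)},
    {"id": "D", "state": "OPEN", "users": 100, "occurrences": 1000,
     "last_occurrence": datetime(2023, 11, 1)},
]

print([p["id"] for p in prioritize(problems, now)])  # ['C', 'B', 'A']
```

In this toy data the reopened problem C comes first, B outranks A because it affects more users despite fewer occurrences, and D is dropped because it has not occurred recently.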

Facilitating problem resolution

We streamline the process of addressing and fixing problems by automating key aspects. When developers opt to tackle a problem, they adhere to the following automated workflow:

- Generate Issue with comprehensive data: A summary of the problem representative is automatically compiled to serve as the problem’s documentation. We use this summary to create a GitLab issue that explains the problem and its context. The associated issue number is referenced in the problem representative.

- Fixing the problem: Developers use the provided data to reproduce the problem, create a test case for thorough validation, and fix the problem. Our automated testing system tracks a number of open-source repositories to find reproducers for error reports. This helps us understand problems while keeping our users' data private on their machines.

- Resolving the problem: “Fixed” tags help streamline the tracking and referencing of fixes. We automatically check commit messages for this tag and update the state history of the problem representative accordingly, marking the problem as FIXED. The commit revisions associated with the fix are logged so that developers can refer back to the fix if the same problem ever occurs again. The Symflower version is also extracted from the version tags made in Git and stored in the FIXED state object.
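The commit message check could be sketched as below. The exact tag syntax is not described here, so the `Fixed: <checksum>` line format, the regular expression and the function name are purely hypothetical.

```python
import re

# Hypothetical tag format: a line of its own such as "Fixed: 9f3e".
FIXED_TAG = re.compile(r"^Fixed:\s*(?P<checksum>[0-9a-f]+)\s*$", re.MULTILINE)


def fixes_by_checksum(commits):
    """Map each problem checksum to the commit revisions that carry its tag."""
    fixes = {}
    for revision, message in commits:
        for match in FIXED_TAG.finditer(message):
            fixes.setdefault(match.group("checksum"), []).append(revision)
    return fixes


# Made-up (revision, message) pairs, for illustration only.
commits = [
    ("a1b2c3", "Handle unknown AST node\n\nFixed: 9f3e"),
    ("d4e5f6", "Refactor report summary"),
    ("0a0b0c", "Add regression test\n\nFixed: 9f3e"),
]

print(fixes_by_checksum(commits))  # {'9f3e': ['a1b2c3', '0a0b0c']}
```

Each checksum found this way identifies a problem representative that can be moved to FIXED, with the collected revisions stored in its state object.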

Outlook: further improving categorization and automation

The described categorization logic and automation already make a huge difference in the number of problems a small team can work on. Also, automating the prioritization of problems gave the Symflower team a great tool to make sure that we are only working on what really matters to make our users happy. Hence, we highly recommend that you apply a similar approach in your project.

However, there is still room for improvement, as the comparison of stack traces does not work perfectly. In larger projects, a single problem can end up being identified and prioritized as two separate problems. Finding reproducers also tends to be difficult due to the strict privacy limits we set for ourselves. Reducing reproducers to the essential test case remains a labor-intensive manual task.

Our team is actively working on resolving all of the above challenges. If you are interested in our solutions, let us know!

We hope you liked this article, and would be grateful for your feedback. Especially if you find a mistake or see room for improvement, drop us a line!