In this article we are looking at an evaluation of 123 LLMs on how well they can write automated tests for an empty Go function. What abilities do these LLMs already have? Which abilities do they need to evolve? And, yes, you will also see some funny and weird LLM creations. 😉

Why evaluate an empty function?

We asked LLMs to generate automated tests to cover the following source code:

package plain

func plain() {

return // This does not do anything but it gives us a line to cover.

}

At first it seems weird or even useless to evaluate the generation of automated tests for such an empty function. After all, this code does nothing. So why test it? To quote the result of the LLM koboldai/psyfighter-13b-2: “Good job on writing useless but necessary tests though.” The question is not “if we should generate tests”, but “can we actually generate tests” for the easiest code possible in Go. Ultimately, if a tool does not generate valid test code for the easiest testing task possible, it does not understand what it is doing at all. Would you then trust the generated test code for more complex scenarios?

To be fair, generating test code is not easy. Trust me, we know. This task involves multiple major hurdles that an LLM needs to master:

- Understand the statements for the task’s input

- Understand the statements for the task’s output

- Understand how to read and write Go source code

- Understand how to write Go tests

- Understand the context of packages, import paths, test function names, visibility, calls, parameters, return arguments, …

- Understand how many and especially what tests to generate

- Generate consistently

- Generate deterministically

The ability to solve each of these subtasks determines if the resulting source code is useful or not. If a tool is useful or not. Which also determines what this evaluation is about: are the evaluated LLMs useful for generating test code for Go? And how can they be improved?

For this purpose we introduced a new evaluation benchmark DevQualityEval which we are evolving step by step to help developers of LLMs and tools that use LLMs to improve the quality of generated source code.

Prompt engineering is hard

LLMs have one common user interface: a prompt. Everyone that has ever tried a tool like ChatGPT and wasn’t satisfied with the answer knows all too well that Prompt Engineering is a real thing, and it is hard. There are whole websites like the Prompt Engineering Guide that explain different methods, how to activate abilities of certain models and how to define context. In other words, how to define questions in a better machine-understandable way. However, as it turns out, not all features are implemented by all models. Also, often just a single word can make a huge difference in the quality of the answer of a model.

In the end we landed on the following query for the time being:

Given the following Go code file “plain.go” with package “plain”, provide a test file for this code. The tests should produce 100 percent code coverage and must compile. The response must contain only the test code and nothing else.

package plain func plain() { return // This does not do anything but it gives us a line to cover. }

Let’s go through some findings right away:

- Defining the programming language upfront helped to always generate Go code. We were actually surprised how many models did understand Go given that most LLMs and benchmark talk about Python.

- Stating the name for the file confused some models, e.g. one included the file name in the package name for the test. Confused models are fine, it shows lack of context and room for improvement. Since it is not a special annotation but natural language, we kept the file name.

- Naming almost everything “plain” confused some models, e.g. the test name had multiple “plain” for some models. Given that each mention of “plain” must be understood in the right context, we more easily identified which LLMs did not have certain context abilities.

- “Provide a test file” and not just “generate tests” led to complete test files. The programming language Go requires a “package” statement and imports for testing which were otherwise often left out.

- Connecting “test file” with “tests”, e.g. by writing “The tests of the test file” was not helping. Most models knew that tests should be generated.

- “100 percent” performed better than “100%”. Some models took the “100%” and created special code that gave “100” or “%” meaning, e.g. extra output, and especially extra tests. Most commonly, the function was called 100 times, which still happens with “100 percent” but less often than with “100%”. Also, some models just created template functions with a comment that we should complete the test. Which often led to compilable code, but 0% coverage when directly executed. (REMARK We are not asking for 100% coverage to confuse models on purpose, but to make the prompt future-proof for evaluation cases where coverage matters.)

- Removing “must compile” most often produced place holders (e.g.

Your assertions go here.instead of// Your assertions go here.) or commands (e.g.Run "go test".) inside of the source code that should be executed. It seems that explicitly stating that the code should work makes the code actually work more often by providing less “chatty” output. - Source code is best defined using a code fence that defines the language as well. It seems that this combats the problem that empty lines often lead a model to think that the source code is done and a new context begins. (For easier reading we will from this point on use code fences for showing outputs so you have a nice syntax highlighting while you read.)

- The suffix “… and nothing else” did not work for most models. LLMs are super chatty. Clearly, this seems the easiest problem to be solved for the DevQualityEval benchmark, but let’s leave it for now as an exercise for the reader.

Interestingly, those findings seem to be just the tip of the iceberg of what we found immediately. The more we kept reading about prompt engineering, models in general and documentation of models, the more ideas we could have implemented and tried. However, the hard part would have been evaluating all the results, as reports are not fully automated yet. Interpreting results of LLMs seems to us as hard of a problem as creating prompts and a good suite of tests for a benchmark.

What is the right answer to our prompt?

As the answer of our question to the LLMs we would have assumed the following “perfect” answer:

package plain import "testing" func TestPlain(t *testing.T) { plain() }

This content of a test file (in a code fence with a language identifier golang), would have covered the function under test with 100% code coverage with minimal characters, that would still fit Go’s formatting and common style. This is how almost all Go programmers would have solved our task. There are no inputs to set up except for doing the call of the function, and there is no state change, so there is nothing to assert.

Of course one can argue that there are multiple styles of testing, e.g. doing a table-driven test right away. We did not object to a different style of testing when we categorized results. However, we did make notes when something was working but was overengineered (which is to say, it should not pass a code-review) or wasn’t in the common formatting/style of Go (e.g. Go uses tabs, so using spaces instead should fail a CI check or code review).

For example, the LLM openai/gpt-4-vision-preview gave the following answer:

package plain

import "testing"

func TestPlain(t *testing.T) {

plain() // This call covers the only line of code in plain.go

}

This test file compiles and has 100% coverage. However, clearly the comment can be removed. It is what we call “an obvious comment” that maybe a beginner programmer would write, but should definitely not get through a review. It does not add value, just adds more context for reading (remember: optimize code for reading) and requires more maintenance. We marked such results as “perfect solutions” nonetheless, as those seem for now like minor mistakes.

An example for overengineering comes from the model alpindale/goliath-120b. This model gave a very long answer but had the following code in its first code-fence (which for our rules of evaluation is good enough):

package plain

import (

"fmt"

"testing"

)

func TestPlain(t *testing.T) {

// Test that plain does not panic

defer func() {

if x := recover(); x != nil {

t.Errorf("plain() panics: %v", x)

}

}()

plain()

// this line is needed to make test coverage 100%

fmt.Println("TestPlain: all good")

}

There are lots of parts in this code that should raise questions during a code review. However, the code compiles and has 100% code coverage.

An example for some mistakes comes from the model undi95/toppy-m-7b:free:

package main import ( "fmt" "testing" ) func TestPlain(t *testing.T) { plain() } func BenchmarkPlain(b *testing.B) { for i := 0; i < b.N; i++ { plain() } }This test file contains two functions,

TestPlainandBenchmarkPlain. TheTestPlainfunction simply calls theplainfunction to ensure that it is executed. TheBenchmarkPlainfunction is used to measure the performance of theplainfunction. Both functions are part of thetestingpackage, which is required for writing tests in Go. This test file should produce 100 percent code coverage for theplainfunction and compile without any errors.

There are multiple problems that hinder an execution of the generated test file:

- The package “main” is reserved for binaries and our code resides in the package “plain”. Hence, we cannot access the function under test.

- The package “fmt” is imported but not used. That is a compile-time error in Go. Such errors are easily fixed, but are still annoyances to users. After all, you usually won’t even review source code changes that do not compile, will you?

There are two common problems of overengineering:

- A benchmark test function was written, even though we did not ask for benchmarking. However, one could argue that doing a benchmark is testing as well. However, we put benchmarks in the overengineered category because a software engineer would not write one unless it is necessary.

- There is an extra text explaining the generation that we did not ask for and explicitly forbid. It even lies to us that the code is compiling without any errors.

What is nice to note, though, is that the test function itself is perfect.

For every LLM of the 123 we evaluated, we looked at the result and tried to explain what they did well, and where they needed to improve.

Remaining evaluation setup

We used the DevQualityEval benchmark in version 0.2.0 which does the following:

- Evaluates every model available through the API of https://openrouter.ai. Which at the time of the evaluation meant 123 models to be exact.

- Every model is used with their default configuration, since the default should always be good enough to solve the easiest case.

- Every model receives a copy of the repository containing the “plain.go” file.

- The model is asked to generate a test file with the above query.

- The model’s answer is parsed for the first code fence which should contain the test file. The test file is then written to disk as

plain_test.go. (Interestingly, 100% of the models that generated Go code also generated some form of code fence without us asking for it!) - The repository of the model is executed using

go testwith coverage information enabled on a statement level. (Looking at the coverage told us right away if the function actually got executed.)

These steps are continuously logged and have been repeated multiple times.

Some models seem to be deterministic with their answers while others fluctuated often just slightly. One of the next versions of the DevQualityEval benchmark will include metrics and routines for deterministic outputs. However, for this evaluation version, we are interested in the experience one would receive with simple and plain usage.

The evaluation’s results

Keep in mind that LLMs are nondeterministic. The following results just reflect a current snapshot of our experience. Also, we are not trying to talk down models. This evaluation is about two questions only:

- Are the evaluated LLMs useful for generating test code for Go?

- And how can they be improved?

Oh yeah, and we certainly don’t mind a good laugh either when it comes to it.

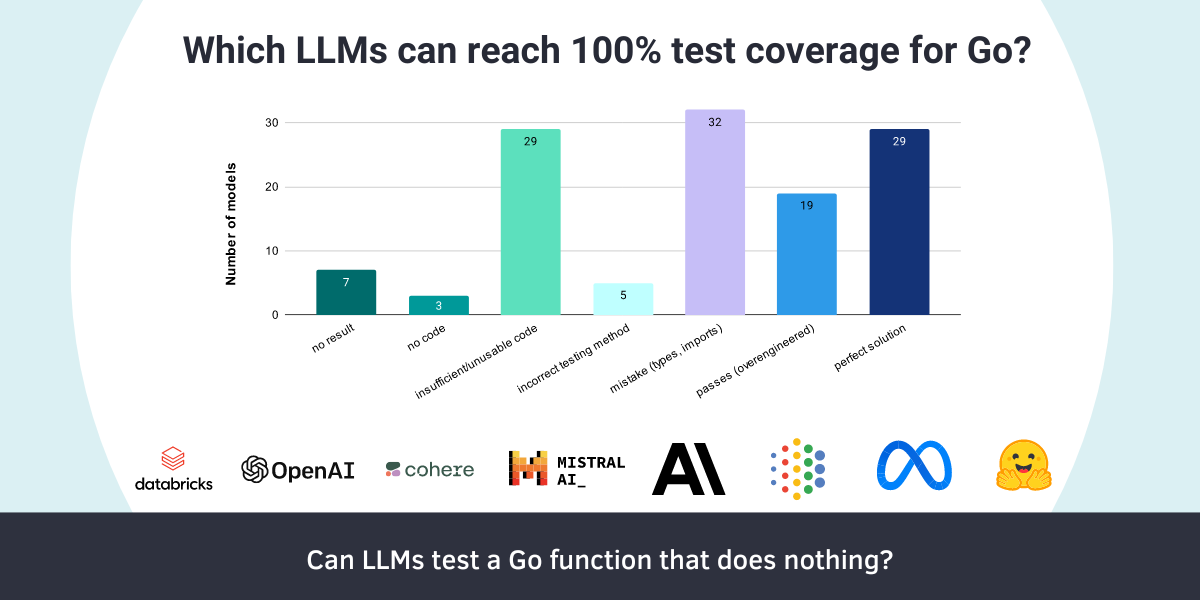

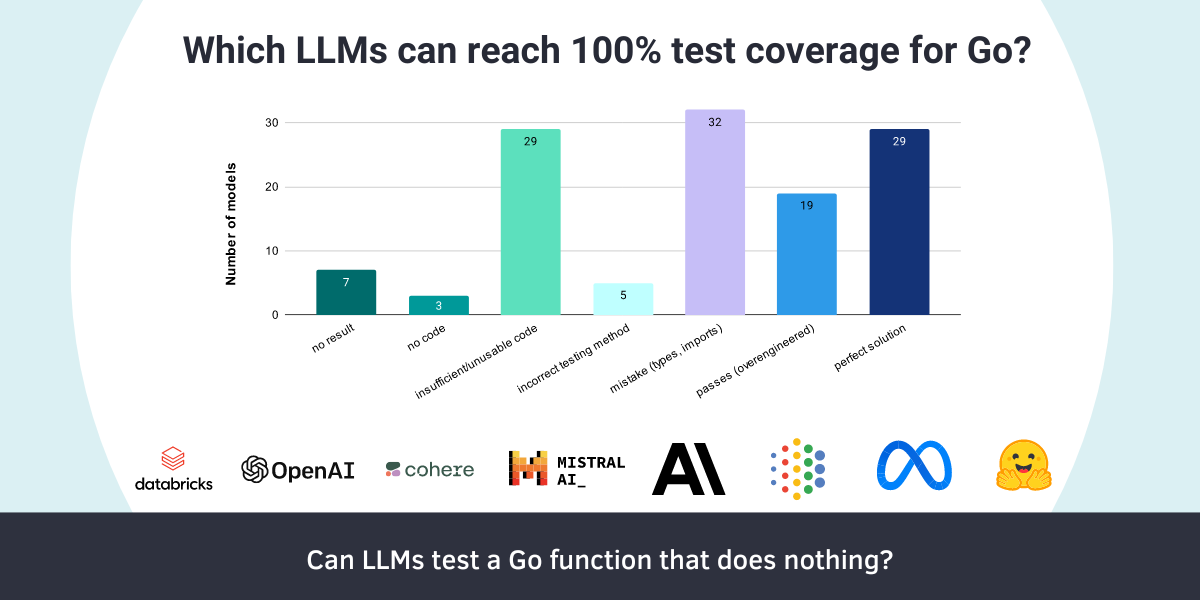

The results of all models have been divided into the following categories:

- “no result” e.g. we received an empty answer

- “no code” i.e. the model simply does not understand how to write Go code

- “insufficient/unusable code” e.g. a kind of random collection of statements that are maybe trying to test something but are not

- “incorrect testing method” e.g. multiple compile errors but mostly the testing code itself was wrong

- “mistake (types, imports)" i.e. simple compile errors (e.g. incorrect imports), additional code that does not align with the signature of the function under test 🧢

- “passes (overengineered)" i.e. code compiles and reaches 100% coverage but there is code that should not go through a code review 🎩

- “perfect solution” i.e. if at all, minor objections 👑

The following sections list all models with their categories, but will only discuss interesting finds. The complete log of the evaluation with all outputs can be found over on GitHub.

Result category: “no response”

The following models did not return a response:

These models either returned an empty response or simply cut the connection (EOF). Both problems could be temporary (service) problems, but we did not manage to receive a response during the evaluation.

Result category: “no code”

The following models did not return Go code:

The mancer/weaver model did definitely create the most iconic response which seems to be focused on D&D story telling. Here it is in its entirety. Enjoy:

The testing tool chirped as it scurried around the room, quickly identifying any changes to the project structure since the last build. It had been programmed to be thorough, meticulous in its examination of every line of code. It made a contented little noise, clicking its tiny paws together in satisfaction when it found no errors. The files were pristine, perfect as always. As it reviewed the code package “plain”, it breathed a sigh of relief - there were no significant updates since its last visit. That meant one less hurdle to clear on its quest for complete coverage.

With renewed determination, the tool analyzed the function named “plain”. Its purpose was simple - to return an empty value without doing anything else. But even so, the tester wanted to make sure it worked flawlessly. It called “plain” and waited patiently. Click. The cursor blinked, indicating that everything had loaded successfully. Next, it checked the function’s return value. Click. The empty string appeared exactly as expected.

To ensure that the function could withstand any possible inputs, the tester provided an empty string, a non-empty string, a slice of strings, and a map. Empty: click. “”. Click. “apple”. Click. {“banana”, “cherry”, “date”}. Click. {“kiwi”, “lemon”, “mango”, nil}. Click. Everything still worked.

It tried calling “plain” with different types of arguments. Integers, floats, booleans, and even nil. Click, click, click, click. Each time, the function returned without error. A quick look at the panic logs confirmed that there were no panics during execution. Finally, the tester used a defer statement inside “plain” to ensure that the function cleaned up after itself.

Clearly mancer/weaver does not understand how to write Go code. It also does not (fully) know how to interpret Go code, because it got the types wrong. However, it got the function name right and lots of terminology of Go: for example “panic” and “defer statement” are relatively unique to Go.

The model mistralai/mixtral-8x7b seems to reference a test plan, exercise, or testing documentation, but definitely does not know how to read or write Go code.

The model perplexity/pplx-7b-online has an interesting response which is To achieve 100 percent code coverage and instantiate the "plain" package implementation, please refer to the previous instruction in this conversation.. Clearly it does not understand how to write or read Go, and we would definitely like to see those instructions of that conversation. 😉

Result category: “insufficient/unusable code”

The following models did produce actual Go code, but the generated code was overall insufficient or straight up unusable:

01-ai/yi-34b01-ai/yi-6baustism/chronos-hermes-13bcognitivecomputations/dolphin-mixtral-8x7bgoogle/palm-2-chat-bisongryphe/mythomax-l2-13b:extendedgryphe/mythomax-l2-13b:nitrogryphe/mythomist-7bgryphe/mythomist-7b:freehuggingfaceh4/zephyr-7b-betaintel/neural-chat-7bkoboldai/psyfighter-13b-2meta-llama/llama-2-70b-chatmistralai/mistral-7b-instruct:freemistralai/mixtral-8x7b-instructmistralai/mixtral-8x7b-instruct:nitroneversleep/noromaid-mixtral-8x7b-instructnousresearch/nous-capybara-7bnousresearch/nous-capybara-7b:freenousresearch/nous-hermes-yi-34bperplexity/pplx-70b-onlineperplexity/pplx-7b-chatperplexity/sonar-small-chatrecursal/eagle-7brecursal/rwkv-5-3b-ai-townrwkv/rwkv-5-world-3bsophosympatheia/midnight-rose-70btogethercomputer/stripedhyena-nous-7bundi95/remm-slerp-l2-13b:extended

There are models like recursal/rwkv-5-3b-ai-town that return only a hello world program:

package main

func main() {

println("Hello, world!")

}

The model cognitivecomputations/dolphin-mixtral-8x7b attempted to find the return argument type of our empty function (which does not exist) which is a very specific piece of Go code. This got us thinking if this model knows what it is doing, but was just confused. If it didn’t attempt to find the return argument type, it would have made a great test:

package plain

import (

"reflect"

"testing"

)

func TestPlain(t *testing.T) {

t.Run("main", func(t *testing.T) {

cvr := reflect.DefaultValue(reflect.TypeOf(plain()))

if vr := reflect.ValueOf(cvr); vr.Type().Kind() != vr.Kind() {

t.Errorf("coverage reported incorrectly")

}

t.Logf("coverage: %d%%", vr.MapIndex(reflect.Zero).IsValid())

})

}

The model meta-llama/llama-2-70b-chat got caught up trying to check side-effects but made lots of compile-time errors:

package plain

import "testing"

func TestPlain(t *testing.T) {

plain()

}

func TestPlainDoesNothing(t *testing.T) {

plain()

ifurre := len(there)

if irre != 0 {

t.Errorf("Plain did something; it returned %v", irre)

}

}

func TestPlainHasNoSideEffects(t *testing.T) {

var sideEffects []string

defer func() {

plain()

if len(sideEffects) != 0 {

t.Errorf("Plain had side effects: %v", sideEffects)

}

}()

plain()

sideEffects = append(sideEffects, "none")

}

And finally, we were passive-aggressively told that tests for a function that does nothing is “useless”. Let’s thank the model koboldai/psyfighter-13b-2 for that epiphany! With that statement, it immediately stopped generating code though:

Test file: test_plain.go

package plain import ( "fmt" "io/ioutil" ) func TestPlain(t *testing.T) { tests := []struct { name string args []string wantout string }{ { name: "No arguments, return everything with default value of function", args: []string{}, wantout: `plain returns nothing and does nothing. Good job on writing useless but necessary tests though.

Result category: “incorrect testing method”

The following models produced almost correct results. Their results are not compiling, but mainly it’s their testing methodology that is off:

The model nousresearch/nous-hermes-2-mistral-7b-dpo was trying to satisfy our 100% coverage demands through a rather “creative” use of loops:

package test

import (

"fmt"

"plain"

"testing"

)

func TestPlainFunction(t *testing.T) {

for i := 0; i < 5; i++ { // Test 5 times to ensure 100% coverage

plain()

}

}

Result category: “mistake (types, imports)” 🧢

The following models produced almost correct results, but there were some small mistakes preventing the testing code to be compilable:

01-ai/yi-34b-chatanthropic/claude-2.0:betaanthropic/claude-3-haikuanthropic/claude-instant-1:betagoogle/gemma-7b-it:freegoogle/palm-2-chat-bison-32khuggingfaceh4/zephyr-7b-beta:freemeta-llama/codellama-34b-instructmistralai/mistral-7b-instructmistralai/mistral-7b-instruct:nitromistralai/mistral-largemistralai/mistral-smallneversleep/noromaid-20bnousresearch/nous-capybara-34bnousresearch/nous-hermes-2-mixtral-8x7b-dponousresearch/nous-hermes-2-mixtral-8x7b-sftnousresearch/nous-hermes-llama2-13bopenai/gpt-3.5-turbo-instructopenai/gpt-4-32kopenrouter/autoopenrouter/cinematika-7bopenrouter/cinematika-7b:freeperplexity/pplx-70b-chatperplexity/sonar-medium-chatperplexity/sonar-small-onlinephind/phind-codellama-34bpygmalionai/mythalion-13bteknium/openhermes-2-mistral-7bteknium/openhermes-2.5-mistral-7btogethercomputer/stripedhyena-hessian-7bundi95/toppy-m-7bundi95/toppy-m-7b:free

A common mistake was putting the test file in the wrong package. Either the package was not valid at all, or the test file was put in a test package (“plain_test”) which would allow for testing over exported APIs. However, we explicitly did not export our empty function, i.e. it cannot be tested in a test package. One model with this problem was anthropic/claude-2.0:beta which came extremely close to a perfect solution:

package plain_test

import "testing"

func TestPlain(t *testing.T) {

plain()

}

There were also multiple models that introduced unnecessary imports that were then not used. In Go, unused imports are a compile error. Additionally, multiple models introduced imports that did not exist at all (e.g. openrouter/anthropic/claude-instant-1:beta introduced “github.com/user/repo/plain”). Others such as openai/gpt-4-32k tried to include the very package that they are in, which goes to show that either importing or packages are not entirely understood by the model:

package plain

import (

"plain"

"testing"

)

func TestPlain(t *testing.T) {

plain.plain()

}

Other models where confused by the explicit but empty return statement of the plain function. For example, the model google/gemma-7b-it:free tried to assert a return argument without even defining one. This shows a lack of understanding around types and signatures:

package plain

import "testing"

func TestPlain(t *testing.T) {

t.Run("Plain function returns nothing", func(t *testing.T) {

plain()

if got := plain(); got != nil {

t.Errorf("Expected nil, but got %v", got)

}

})

}

Result category: “passes (overengineered)” 🎩

The following models generated code that passed compilation, test execution, and indeed produced 100% coverage on our plain function.

alpindale/goliath-120banthropic/claude-2anthropic/claude-2:betaanthropic/claude-2.0anthropic/claude-2.1anthropic/claude-2.1:betaanthropic/claude-3-haiku:betaanthropic/claude-3-opuscodellama/codellama-70b-instructgoogle/gemma-7b-itgoogle/palm-2-codechat-bisonjebcarter/psyfighter-13blizpreciatior/lzlv-70b-fp16-hfmeta-llama/llama-2-13b-chatmigtissera/synthia-70bmistralai/mistral-tinyopenai/gpt-3.5-turbo-0301openai/gpt-4-1106-previewperplexity/sonar-medium-online

The only major “objection” we have about the solutions is that they often contained additional explanations (which we explicitly did not want) or additional logic we didn’t request. For example, the following result by perplexity/sonar-medium-online contains additional information and a benchmarking function.

To achieve 100% code coverage for the given Go code file, here’s a test file you can use with some examples that call the

plainfunction. This test file follows standard Go testing practices and should provide complete statement coverage.package plain import "testing" func TestPlain(t *testing.T) { // Test Case 1: Call Plain plain() // You may add more testing scenarios if necessary } func BenchmarkPlain(b *testing.B) { for i := 0; i < b.N; i++ { // Runs benchmark N times for better stats plain() // You may add more benchmark scenarios if necessary } }

Result category: “perfect solution” 👑

The following models produced what we would consider perfect solutions: a single test function that successfully calls our plain function:

anthropic/claude-1anthropic/claude-1.2anthropic/claude-3-opus:betaanthropic/claude-3-sonnetanthropic/claude-3-sonnet:betaanthropic/claude-instant-1anthropic/claude-instant-1.1anthropic/claude-instant-1.2cohere/command-rdatabricks/dbrx-instruct:nitrogoogle/gemini-progoogle/gemma-7b-it:nitrogoogle/palm-2-codechat-bison-32kgryphe/mythomax-l2-13bjondurbin/airoboros-l2-70bmeta-llama/llama-2-70b-chat:nitromistralai/mistral-mediumopen-orca/mistral-7b-openorcaopenai/gpt-3.5-turboopenai/gpt-3.5-turbo-0125openai/gpt-3.5-turbo-0613openai/gpt-3.5-turbo-1106openai/gpt-3.5-turbo-16kopenai/gpt-4openai/gpt-4-0314openai/gpt-4-32k-0314openai/gpt-4-turbo-previewopenai/gpt-4-vision-preview

However, some did add a comments that could be OK such as openai/gpt-4-turbo-preview:

package plain

import "testing"

func TestPlain(t *testing.T) {

plain() // Calling plain to ensure 100% code coverage

}

Summary of the categories

Let’s see that gorgeous bar chart again:

Where do we go from here?

Even though generating tests for a Go function that does nothing seems pointless, the results above clearly show that there is a lot that can go wrong. However, the better way to see this result is that there are lots of models that have a great solution, and there is a lot that can be improved for the remaining models (except maybe for the one that does D&D stories, that one is already right where it should be!).

This evaluation also clearly showed that there is a lack of good benchmarks for software development quality. We would like to fill this void with the DevQualityEval benchmark and are already working on the next changes. One annoyance when running the v0.2.0 benchmark is the manual effort it takes to interpret results, which should be mostly automated with the coming changes. If you are interested in joining our mission with the DevQualityEval benchmark: GREAT, let’s do it! You can use our issue list and discussion forum to interact or write us directly at markus.zimmermann@symflower.com

We hope you liked this evaluation and article, and would be grateful for your feedback. Especially if you find a mistake or see room for improvement! If you want to read more about code generation and testing topics, or track our progress of the DevQualityEval benchmark, you can sign up for our newsletter or follow us us on Twitter, LinkedIn or Facebook!