This post details the complex statistical and domain-specific scorers that you can use to evaluate the performance of large language models. It also covers the most widely used LLM evaluation frameworks to help you get started with assessing model performance.

The previous post in this series introduced LLM evaluation in general, the types of evaluation benchmarks, and how they work. It also covered some generic metrics that benchmarks use to measure LLM performance.

💡 A blog post series on LLM benchmarking

Read all the other posts in Symflower’s series on LLM evaluation, and check out our latest deep dive on the best LLMs for code generation.

Using scorers to evaluate LLMs

In order to evaluate LLMs in a comparable way, you’ll need to use generally applicable and automatically measurable metrics.

(That said, human-in-the-loop evaluation is also possible, whether for quick “vibe checks” or for comprehensive human evaluations. While these are costly and complex to set up, they may be necessary if your goal is a really thorough evaluation.)

In this post, we’ll focus on the standardized metrics you can use to measure and compare the performance of large language models for a given set of tasks.

🤔 What do we mean by “LLM performance”?

Throughout this post, we’ll use “LLM performance” to mean an estimate of how useful an LLM is for a given task. Indicators like tokens per second and latency, cost metrics, and other scorers such as user engagement metrics are certainly useful, but they are outside the scope of this post.

Symflower’s DevQualityEval takes into account token costs to help select LLMs that produce good test code. Check out our deep dives if you’re looking for a coding LLM that may be useful in a real-life development environment:

- Anthropic’s Claude 3.7 Sonnet is the new king 👑 of code generation (but only with help), and DeepSeek R1 disappoints (Deep dives from the DevQualityEval v1.0)

- OpenAI’s o1-preview is the king 👑 of code generation but is super slow and expensive (Deep dives from the DevQualityEval v0.6)

- DeepSeek v2 Coder and Claude 3.5 Sonnet are more cost-effective at code generation than GPT-4o! (Deep dives from the DevQualityEval v0.5.0)

- Is Llama-3 better than GPT-4 for generating tests? And other deep dives of the DevQualityEval v0.4.0

- Can LLMs test a Go function that does nothing?

LLM evaluation metrics fall into two main categories:

- Supervised metrics: Used when reference labels (i.e. a ground truth: an expected correct answer) are available. For example, asking an LLM to add 2 and 2 has exactly one correct answer.

- Unsupervised metrics: When there’s no ground truth label, unsupervised metrics can be used. These are generally harder to calculate and potentially less meaningful. If you ask an LLM to write a pretty poem, how are you going to score how good the poem is? This category includes metrics such as perplexity, length of the response, etc.
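Of the unsupervised metrics mentioned above, perplexity has an exact definition: the exponential of the average negative log-likelihood that a model assigns to the tokens of a text. Here is a minimal sketch. It assumes you already have one natural-log probability per token from your model, and the values below are made up for illustration:

```python
import math

def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity = exp(-(1/N) * sum of log p(token_i | previous tokens)).

    `token_logprobs` holds one natural-log probability per token.
    Lower values mean the model found the text less "surprising".
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    avg_neg_logprob = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(avg_neg_logprob)

# Made-up log-probabilities for a 4-token response.
logprobs = [math.log(0.5), math.log(0.25), math.log(0.5), math.log(1.0)]
print(round(perplexity(logprobs), 4))  # 2.0
```

A perplexity of 2.0 means that, on average, the model was as uncertain as if it were choosing uniformly between two tokens at each step.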

Supervised metrics are favored because they are the easiest to handle. Either the model response corresponds to the correct solution or it does not, simple as that. Some parsing and/or prompt tweaking may be required to extract the correct answer from the text that an LLM produces, but the scoring itself is very straightforward.
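To illustrate the parsing step, here is a minimal sketch of exact-match scoring for arithmetic questions. The "take the last number in the response" heuristic and the function names are assumptions made for illustration. Real benchmarks define their own answer-extraction rules.

```python
import re
from typing import Optional

def extract_final_number(response: str) -> Optional[str]:
    """Return the last number in a free-form response, or None if there is none."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", response)
    return numbers[-1] if numbers else None

def exact_match_accuracy(responses: list[str], references: list[str]) -> float:
    """Fraction of responses whose extracted answer equals the reference answer."""
    if len(responses) != len(references):
        raise ValueError("responses and references must have the same length")
    if not references:
        return 0.0
    hits = sum(
        extract_final_number(response) == reference.strip()
        for response, reference in zip(responses, references)
    )
    return hits / len(references)

responses = ["2 + 2 equals 4.", "The answer is 5", "I'd say it's 4"]
print(exact_match_accuracy(responses, ["4", "4", "4"]))  # 2 of 3 correct
```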

Unsupervised metrics are more challenging because their results can’t simply be divided into “black and white”. And since the real world is rarely “black and white” either, we will focus on these metrics for the rest of this blog post.

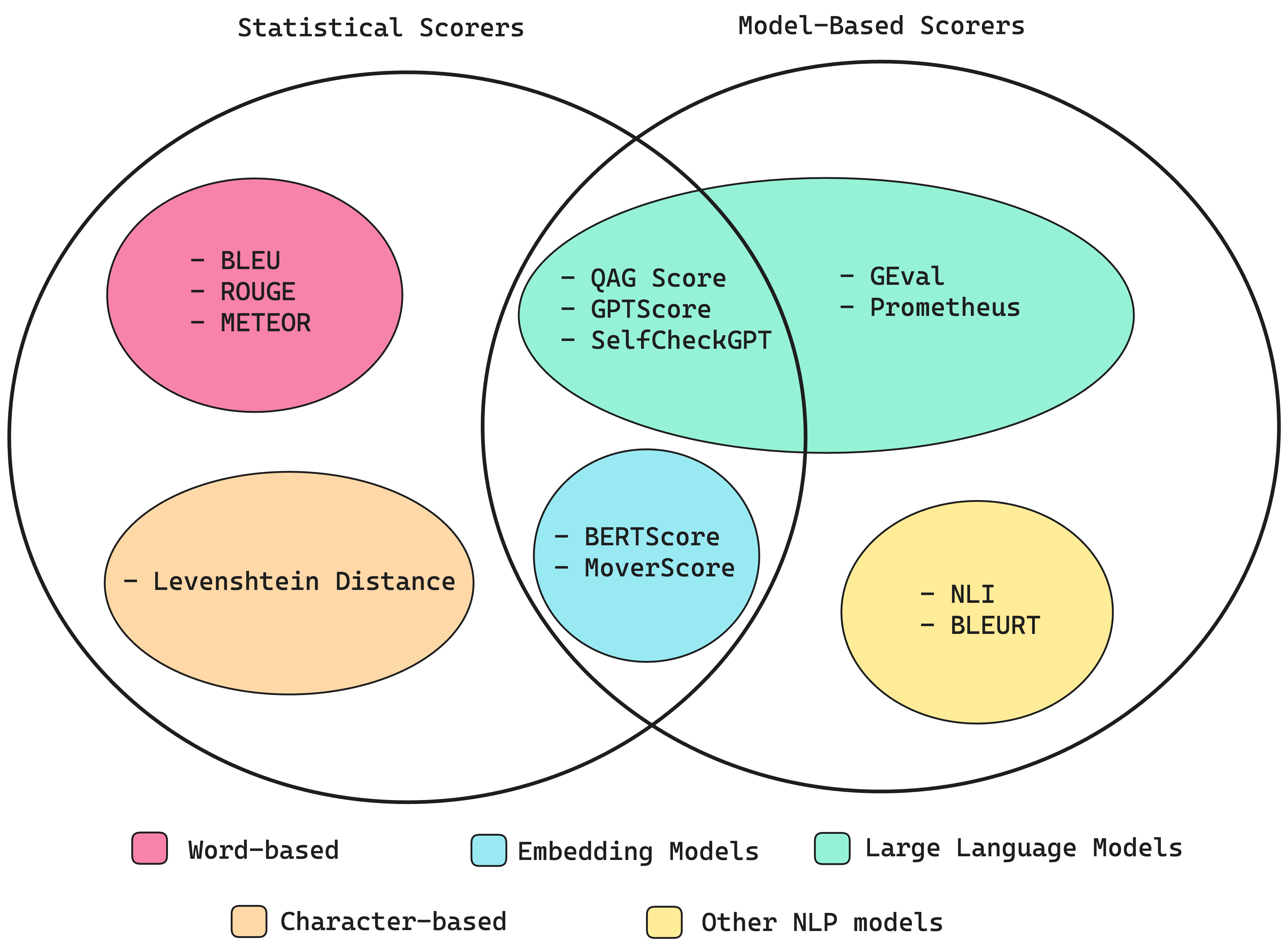

Most often, you’ll be relying on two key categories of unsupervised metrics when evaluating LLMs:

- Statistical scorers: Used to analyze LLM performance with purely statistical methods that measure the difference between the LLM’s actual output and the expected or acceptable output. These methods are considered suboptimal when reasoning is required or when evaluating long and complex LLM outputs, because such metrics don’t capture semantics well.

- Model-based scorers for LLM-assisted evaluation: These scorers rely on another LLM (e.g. GPT-4) to score the tested LLM’s output (i.e. the “AI evaluating AI” scenario). This is faster and more cost-effective than manual (human) evaluation, but it can be unreliable because LLMs are nondeterministic. Recent research has also shown that AI evaluators can be biased toward their own responses.
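Here is a minimal sketch of the model-based ("LLM-as-a-judge") pattern described above. It assumes `judge` is a function you supply that sends a prompt to the evaluator model and returns its reply. No real API client is shown. The rubric prompt and the `Score: <n>` reply format are hypothetical. Averaging several judgments is one simple way to dampen nondeterminism, but it does not eliminate it.

```python
import re
from typing import Callable

# Hypothetical rubric prompt; approaches such as G-Eval use more elaborate prompting.
JUDGE_PROMPT = (
    "Rate the answer to the question on a scale from 1 to 5.\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    'Reply with a single line of the form "Score: <1-5>".'
)

def judge_score(question: str, answer: str,
                judge: Callable[[str], str], samples: int = 3) -> float:
    """Query the judge model `samples` times and average the parsable scores.

    `judge` is a placeholder for your own call to an evaluator LLM.
    """
    prompt = JUDGE_PROMPT.format(question=question, answer=answer)
    scores = []
    for _ in range(samples):
        match = re.search(r"Score:\s*([1-5])", judge(prompt))
        if match:  # skip replies that ignore the requested format
            scores.append(int(match.group(1)))
    if not scores:
        raise ValueError("the judge never returned a parsable score")
    return sum(scores) / len(scores)

# A stub standing in for a real (nondeterministic) judge model.
replies = iter(["Score: 4", "Score: 5", "I'd give it Score: 3"])
print(judge_score("What is 2 + 2?", "4", lambda prompt: next(replies)))  # 4.0
```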

We’ll provide examples of both in the sections below.

When evaluating LLMs, you’ll want to carefully choose the performance indicators that best fit the context and goals of your assessment. Depending on the intended application scenario for the LLM (e.g. summarization, conversation, coding, etc.), you’ll want to pick different metrics. By their nature, LLMs are particularly good at processing and generating text, so many metrics exist for this application domain. More “exotic” scorers exist for other domains, but these are out of the scope of this blog post.

Here are the main areas for LLM evaluation (i.e. the tasks on which you assess the performance of LLMs) and some commonly used metrics for each:

- Summarization: Summarizing a piece of input text in a shorter format while retaining its main points. (Metrics: BLEU, ROUGE, ROUGE-N, ROUGE-L, METEOR, BERTScore, MoverScore, SUPERT, BLANC, FactCC)

- Question answering: Finding the answer to a question in the input text. (Metrics: QAEval, QAFactEval, QuestEval)

- Translation: Translating text from one language to another. (Metrics: BLEU, METEOR)

- Named Entity Recognition (NER): Identifying and grouping named entities (e.g. people, dates, locations) in the input text. (Metrics: InterpretEval, classification metrics such as precision, recall, accuracy, etc.)

- Grammatical tagging: Also known as part-of-speech (POS) tagging, this task has the LLM identify the words in the input text and annotate them with grammatical tags (e.g. noun, verb, adjective).

- Sentiment analysis: Identifying and classifying the emotions expressed in the input text. (Metrics: precision, recall, F1 score)

- Parsing: Classifying and extracting structured data from text by analyzing its syntactic structure and identifying its grammatical components. (Benchmarks: Spider, SParC)
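Several of the tasks above, such as NER and sentiment analysis, are scored with classification metrics. Here is a minimal sketch of entity-level precision, recall, and F1. It assumes entities are compared as exact `(text, label)` pairs. Stricter or partial-match schemes also exist.

```python
def precision_recall_f1(predicted: set, gold: set) -> tuple[float, float, float]:
    """Entity-level precision, recall, and F1 based on exact (text, label) matches."""
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical LLM output vs. hand-labeled ground truth: one label is wrong.
predicted = {("Ada Lovelace", "PERSON"), ("London", "LOCATION"), ("1843", "PERSON")}
gold = {("Ada Lovelace", "PERSON"), ("London", "LOCATION"), ("1843", "DATE")}
p, r, f1 = precision_recall_f1(predicted, gold)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
# precision=0.67 recall=0.67 f1=0.67
```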

In the next section, we introduce some of the most commonly used scorers.

Statistical scorers used in model evaluation

- BLEU (BiLingual Evaluation Understudy): This scorer measures the precision of matching n-grams (sequences of n consecutive words) between the output and the expected ground truth. It’s commonly used to assess the quality of translations produced by an LLM. A brevity penalty is applied to outputs that are shorter than the reference, because precision alone would otherwise reward very short outputs.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): This scorer is mostly used to evaluate the summarization and translation performance of models. ROUGE measures recall, i.e. how much of the content from one or more references is actually contained in the LLM’s output. ROUGE has multiple variants, such as ROUGE-1, ROUGE-2, and ROUGE-L.

- METEOR (Metric for Evaluation of Translation with Explicit Ordering): Mostly used for evaluating translations, METEOR is a bit more comprehensive because it combines precision and recall of matched words (weighting recall more heavily) and penalizes matches that appear in a different order. It is based on a generalized concept of unigram (single-word) matching between the output (e.g. the LLM’s translation) and the reference text (e.g. a human-produced translation), which can also match stems and synonyms.

- Levenshtein distance (or edit distance): Used, among other things, to assess spelling corrections. This scorer calculates the edit distance between two texts (e.g. the LLM’s output and the expected text), i.e. the minimum number of single-character edits (insertions, deletions, or substitutions) required to change one into the other.
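To make the statistical scorers above concrete, here is a minimal pure-Python sketch of three of them. The BLEU function computes sentence-level BLEU with a single reference, uniform n-gram weights, a brevity penalty, and no smoothing. The other two functions compute ROUGE-N recall and Levenshtein distance. Whitespace tokenization is a simplifying assumption. Established libraries such as sacreBLEU or rouge-score handle tokenization, smoothing, and multiple references, and are the better choice for numbers you plan to report.

```python
import math
from collections import Counter

def ngrams(tokens: list[str], n: int) -> Counter:
    """Count all n-grams (tuples of n consecutive tokens)."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Sentence-level BLEU: one reference, uniform weights, no smoothing."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each n-gram's count by how often it occurs in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, one zero precision zeroes the score
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: outputs shorter than the reference are penalized.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)

def rouge_n_recall(candidate: str, reference: str, n: int = 1) -> float:
    """ROUGE-N recall: share of reference n-grams that also appear in the output."""
    cand_counts = ngrams(candidate.split(), n)
    ref_counts = ngrams(reference.split(), n)
    total = sum(ref_counts.values())
    if total == 0:
        return 0.0
    overlap = sum(min(c, cand_counts[g]) for g, c in ref_counts.items())
    return overlap / total

def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                 # delete ca
                            curr[j - 1] + 1,             # insert cb
                            prev[j - 1] + (ca != cb)))   # substitute (free if equal)
        prev = curr
    return prev[-1]

reference = "the cat is on the mat"
candidate = "the cat sat on the mat"
print(round(bleu(candidate, reference, max_n=2), 4))   # 0.7071
print(round(bleu(candidate, reference), 4))            # 0.0 (no matching 4-gram)
print(round(rouge_n_recall(candidate, reference), 4))  # 0.8333
print(levenshtein("recieve", "receive"))               # 2
```

The second result shows why sentence-level BLEU is usually smoothed or computed at the corpus level. Short outputs often share no 4-gram with the reference at all, which drives the unsmoothed score to zero.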

Model-based scorers in LLM evaluation

LLM-based evaluations can be more accurate than statistical scorers, but due to the probabilistic nature of LLMs, reliability can be an issue. With that said, a range of model-based scorers is available, including:

- BLEURT (Bilingual Evaluation Understudy with Representations from Transformers): BLEURT uses transfer learning to assess natural language generation while accounting for linguistic diversity. As a complex scorer, it evaluates how fluent the output is and how accurately it conveys the meaning of the reference text. In this case, transfer learning means that a pre-trained BERT model (see below) is further pre-trained on synthetic data and then fine-tuned on human ratings before it is used as a scorer.

- NLI (Natural Language Inference), aka Recognizing Textual Entailment (RTE): This scorer determines whether the output is logically consistent with the input (entailment), contradicts it (contradiction), or is unrelated to it (neutral). Generally, a score of 1 means entailment, while values near 0 represent contradiction.
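In practice, an NLI model outputs one raw logit per label, and the scorer turns these logits into probabilities. Here is a minimal sketch that uses the entailment probability as the score. It assumes the label order `(contradiction, neutral, entailment)`. This order differs between models, so check your model's configuration. The logits are made up.

```python
import math

# Assumed label order; it differs between NLI models, so check your model's config.
LABELS = ("contradiction", "neutral", "entailment")

def softmax(logits: list[float]) -> list[float]:
    """Turn raw logits into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entailment_score(logits: list[float]) -> float:
    """Probability that the premise (input) entails the hypothesis (LLM output)."""
    if len(logits) != len(LABELS):
        raise ValueError(f"expected {len(LABELS)} logits, got {len(logits)}")
    probs = dict(zip(LABELS, softmax(logits)))
    return probs["entailment"]

# Made-up logits for one (input text, LLM output) pair.
print(round(entailment_score([-2.0, 0.5, 3.0]), 3))  # 0.918
```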

Combined statistical & model-based scorers

You can combine the two types to balance out the shortcomings of both statistical and model-based scorers. A variety of metrics use this approach, including:

- BERTScore: BERTScore is an automatic evaluation metric for text generation that relies on pre-trained language models (e.g. BERT) to compare the contextual embeddings of the tokens in the output and the reference. It has been shown to correlate with human judgment at both the sentence and the system level.

- MoverScore: Also based on BERT, MoverScore can be used to assess the similarity between a pair of sentences written in the same language. It’s especially useful in tasks where the same meaning can be conveyed in several ways, so the wording of the output need not match the reference exactly. It shows a high correlation with human judgment of the quality of generated text.

- Other metrics, such as SelfCheckGPT, can be used for tasks like checking an LLM’s output for hallucinations.

- Finally, Question Answer Generation (QAG) fully automates LLM evaluation by assessing the output with yes/no questions, which can themselves be generated by another model.
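The core of BERTScore is greedy token matching over contextual embeddings. Here is a minimal sketch of that matching step. It assumes token embeddings have already been produced by a BERT-style model. The 2-D vectors below are toy stand-ins. The official `bert-score` package also supports IDF weighting and baseline rescaling, which are omitted here.

```python
import math

def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def bertscore_from_embeddings(cand: list[list[float]],
                              ref: list[list[float]]) -> tuple[float, float, float]:
    """Greedy-matching BERTScore without IDF weighting or baseline rescaling.

    Every token is matched to its most similar token on the other side.
    Precision averages over candidate tokens, recall over reference tokens.
    """
    sim = [[cosine(c, r) for r in ref] for c in cand]
    precision = sum(max(row) for row in sim) / len(cand)
    recall = sum(max(sim[i][j] for i in range(len(cand)))
                 for j in range(len(ref))) / len(ref)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0
    return precision, recall, f1

# Toy 2-D "token embeddings"; real ones come from a BERT-style model.
cand = [[1.0, 0.0], [0.0, 1.0]]
ref = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
p, r, f1 = bertscore_from_embeddings(cand, ref)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")  # P=1.000 R=0.902 F1=0.949
```

Every candidate token has an exact match, so precision is 1.0. Recall is lower because the third reference token is only partially similar to anything in the candidate.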

LLM evaluation frameworks vs benchmarks

Considering the number and complexity of the above metrics (and their combinations that you’ll want to use for comprehensive evaluation), testing LLM performance can be a challenge. Tools like evaluation frameworks and benchmarks help you run LLM assessments with selected metrics.

Let’s get the basics down first.

🤔 What’s the difference between LLM evaluation frameworks and benchmarks?

- Frameworks are great toolkits for conducting LLM evaluations with custom configurations, metrics, etc.

- Benchmarks are standardized tests (e.g. sets of predefined tasks, metrics, ground truths) that provide comparable results for a variety of models.

Think of how many seconds it takes a sports car to reach 100 km/h. That is a benchmark you can use to compare different models and brands. But to obtain that value, you need the right equipment (e.g. a precise stopwatch, a straight section of road, and, of course, the car to be measured). That’s what a framework provides. We list a few of the most popular evaluation frameworks below.

Top LLM evaluation frameworks

DeepEval

DeepEval is a very popular open-source framework. It is easy to use, flexible, and provides built-in metrics including:

- G-Eval

- Summarization

- Answer Relevancy

- Faithfulness

- Contextual Recall

- Contextual Precision

- RAGAS

- Hallucination

- Toxicity

- Bias

DeepEval also lets you create custom metrics and offers CI/CD integration for convenient evaluation. The framework includes popular LLM benchmark datasets and configurations (including MMLU, HellaSwag, DROP, BIG-Bench Hard, TruthfulQA, HumanEval, GSM8K).

Giskard

Giskard is also open-source. It’s a Python-based framework that you can use to detect performance, bias, and security issues in your AI applications. It automatically detects problems including hallucinations, generation of harmful content, disclosure of sensitive information, prompt injection, robustness issues, etc. One neat thing about Giskard is that it comes with a RAG Evaluation Toolkit specifically for testing Retrieval Augmented Generation (RAG) applications.

Giskard is a flexible choice that works with all models and environments and integrates with popular tools.

promptfoo

Another open-source solution, promptfoo lets you test LLM applications locally. It’s a language-agnostic framework that offers caching, concurrency, and live reloading for faster evaluations.

Promptfoo lets you use a variety of models, including those from OpenAI, Anthropic, Azure, Google, and Hugging Face, as well as open-source models like Llama. It presents detailed, directly actionable results in an easy-to-scan matrix layout. An API makes it easy to work with promptfoo programmatically.

LangFuse

LangFuse is another open-source framework that’s free for hobbyists to use. It provides tracing, evaluation, prompt management, and metrics. LangFuse is model- and framework-agnostic, integrates with LlamaIndex, LangChain, OpenAI SDK, LiteLLM, and more, and also offers API access.

Eleuther AI

Eleuther AI’s LM Evaluation Harness is one of the most comprehensive (and most popular) frameworks. It includes 200+ evaluation tasks and 60+ benchmarks. The framework supports custom prompts and evaluation metrics, as well as local models and benchmarks, so it can cover a wide range of evaluation needs.

One indication of its value: it is the framework that powers Hugging Face’s popular Open LLM Leaderboard.

RAGAs (RAG Assessment)

RAGAs is a framework designed for evaluating RAG (Retrieval Augmented Generation) pipelines. (RAG uses external data to improve the context available to an LLM.)

The framework focuses on core metrics including faithfulness, contextual relevancy, answer relevancy, contextual recall, and contextual precision. It provides all the tools that are necessary for evaluating LLM-generated text. You can integrate RAGAs into your CI/CD pipeline to provide continuous checks on your models.

Weights & Biases

In addition to evaluating LLM applications, a key benefit of Weights & Biases' solution is that you can use it for training, fine-tuning, and managing models. It’s also useful for spotting regressions, visualizing results, and sharing them with others.

Despite consisting of multiple modules (W&B Models, W&B Weave, W&B Core), its developers claim you can set the system up in 5 minutes.

Azure AI Studio

Microsoft’s Azure AI Studio is an all-in-one hub for creating, assessing, and deploying AI models. It lets you visualize results for an easy overview, helping you pick the right AI model for your needs. Azure AI Studio also provides a control center that helps optimize and troubleshoot models. Notably, it supports no-code, low-code, and pro-code use cases, so LLM enthusiasts at any level of expertise can get started with it.

Summary: key LLM evaluation metrics & frameworks

We hope this description of the complex metrics used in LLM evaluation gives you a better understanding of what scorers you’ll have to watch for when evaluating models for your specific use case. If you’re ready to start assessing LLM performance, the above evaluation frameworks will help you get started.

Looking for an LLM to generate software code? Don’t miss the next part of this series which provides an overview of the most popular LLM benchmarks for generating software code: