We cover the strategies that help you control costs when using Large Language Models to generate text or software code.

Integrating LLMs into your workflow can open up a wide range of use cases where there is a need to generate content. But the help LLMs provide doesn’t come for free, especially if you use tools that rely on external third-party LLM inference APIs.

This post focuses on managing the costs of text generation with LLMs in general. Still, the insights below should be beneficial for software developers looking to enhance a range of tasks like code generation, generating documentation, creating tests, managing projects, and more. We will look closer at these use cases, where instead of purchasing a full off-the-shelf product (like GitHub Copilot), you use custom tools where the main cost driver is the LLM powering the solution. The following tips for managing LLM costs can help maintain cost efficiency as you improve your productivity with LLMs.

👑 Looking for the best LLM tools to support your coding?

Read our posts:

The cost factors of using Large Language Models

The main cost factor contributing to the eventual cost of using LLM-based tools in your workflow is the cost of using the Large Language Model itself. You can either host models locally (or via a private server) or you can use cloud providers and pay by computational consumption e.g. the number of tokens used (think of it as the SaaS model in LLMs).

Self-hosted LLM

The main cost associated with this option is the upfront cost of setting up the necessary infrastructure (network) and computing resources (GPUs). There may also be licensing fees involved (although most closed-weight models aren’t available for self-hosting). There are some cool projects emerging in this field, like exo which enables you to combine multiple devices into one powerful inference cluster without much hassle. The upside of going the self-hosted way is that the privacy of your data is ensured. The downside is the upfront investment in hardware, as well as the ongoing maintenance costs.

Token-based pricing

In a pay-per-token pricing model (i.e. when using a cloud provider), you’ll pay by the amount of token data processed and produced by your LLM of choice. Tokens are the currency in the world of LLM. Large Language Models are based on neural networks that treat data in a numerical way, but text is not numeric. Hence, a translation needs to happen. Tokens represent translated sequences of text (or code) that the LLM can process. Based on the tokenization method, a token could mean characters, portions of words, or entire words. In the English language, an average token is 0.75 words or four characters.

🤔 How do tokens work?

Input prompts have to be converted to a vector format before running them through the LLM to get an output, and that output has to be converted back to text. The more input tokens an LLM has to process, or the more output tokens it has to produce, the more computational power is required. You are paying based on how much text the LLM has to process and produce, but the cost is not calculated per character or word, but per token.

Different vendors may calculate tokens differently, and pricing may vary for input (prompt) or output (completion) tokens, with completion tokens being generally more expensive. Also, special characters cost extra. English-language prompts and output take the fewest tokens. The upside of token-based pricing is that it provides a flexibly scalable system. The downside, of course, is that it can get quite costly.

Make sure to check out the policy of the LLM provider of your choice to understand their tokenization method. You’ll find a variety of online LLM token counters to give you an idea of how many tokens a given prompt is going to mean. The LLM Token Counter is one web application that lets you select specific models to check how many (input) tokens your prompt is going to consume. Some LLM developers also provide their own token counters, like OpenAI’s Tokenizer.

Such calculators help you develop an intuition of the token costs of your input (prompts). But the size (= amount of tokens) of the generated output will still be unknown because it is not possible to predict what the LLM is going to produce. Using agent libraries can also affect tokens as they may add additional context to a prompt, adding tokens (and hence cost) without you knowing.

The bottom line is that even though tokenization makes the pricing logic a bit more challenging, there isn’t really any way around it. LLMs use tokens as a fundamental input/output unit, so it makes sense to adapt pricing to that. Also, it is worth mentioning that an LLM and its tokenizer (i.e. translator) only work together and usually cannot be separated, so when choosing an LLM, you are stuck with whatever tokenization method was used during the training of that LLM.

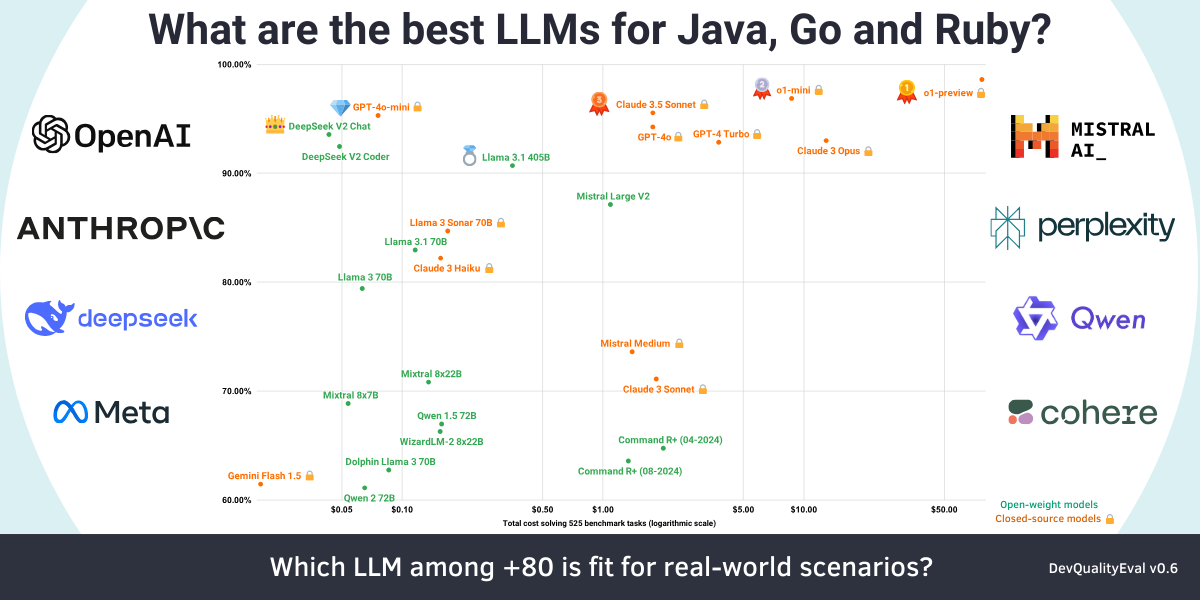

It can pay off to compare the prices of different providers and models, as the costs can span from i.e. $0.42 per 1 million tokens (for DeepSeek’s Chat/Coder models) to a whopping $18 per 1 million tokens (for Anthropic’s Claude Sonnet 3.5). That is a factor 43x difference at the time of writing (late Aug 2024). Given that we found both models to have similar capabilities for software development tasks in our DevQualityEval benchmark, it really pays off to invest some time into finding the model that best suits your use case (see more on that below). For up-to-date results, check out the latest DevQualityEval deep dive.

Managing LLM spending: best practices to optimize LLM costs

There are a few ways to reduce the costs of using Large Language Models in your workflow.

Finding the right model & reducing LLM size

LLM size (the number of parameters) is a big cost factor and it also greatly affects performance. You will deal with model size most likely when running models locally, as cloud providers usually abstract this factor away by adjusting the price accordingly. Not everyone has a few hundred GB of GPU memory to casually run the biggest Llama 3.1 405B model.

Aim to strike the right balance between model accuracy and costs. It’s a good idea to test multiple models at the same time, and then choose the smallest model that delivers the required performance for a given task.

🦾 DevQualityEval: an LLM benchmark focused on code generation

Check out our deep dives:

- Anthropic’s Claude 3.7 Sonnet is the new king 👑 of code generation (but only with help), and DeepSeek R1 disappoints (Deep dives from the DevQualityEval v1.0)

- OpenAI’s o1-preview is the king 👑 of code generation but is super slow and expensive (Deep dives from the DevQualityEval v0.6)

- DeepSeek v2 Coder and Claude 3.5 Sonnet are more cost-effective at code generation than GPT-4o! (Deep dives from the DevQualityEval v0.5.0)

- Is Llama-3 better than GPT-4 for generating tests? And other deep dives of the DevQualityEval v0.4.0

- Can LLMs test a Go function that does nothing?

While larger models usually give better results, smaller models tend to cost a fraction of larger models. For instance, here are the differences in pricing for GPT-4o vs GPT-4o mini at the time of writing (early Sep 2024):

- GPT-4o mini: $0.75 per 1 million tokens

- GPT-4o: $20.00 per 1 million tokens

There is an over 30x price difference, suggesting it really pays to optimize your use of LLMs.

Quantization

Quantization is a conversion technique that helps reduce the size of locally run models by reducing the precision of model weights. Quantization also helps the model run faster, enabling you to use less powerful hardware and less memory. It moderately reduces the model’s quality, but in turn it can result in significant cost savings. Many local inference tools like Ollama support different quantization settings out of the box. Learn more about LLM quantization in this post.

Fine-tuning

When working with open-weight models, fine-tuning them for a specific task is another possibility to convert a smaller, weaker model into an expert model in one specific area. Projects like unsloth make fine-tuning local models more approachable, but note that it’s still a non-trivial task. There are even offers from closed-weight providers (e.g. OpenAI) to fine-tune their models on custom data, but since the training is done on their hardware, this procedure doesn’t come cheap.

Distillation

Distillation is another technique that involves teaching a smaller model (the student) to mimic the outputs of a larger pre-trained model (the teacher). Distillation can be considered an advanced technique since it requires training a new model from scratch.

🤓 Curious about LLM benchmarking?

Check out the series:

LLM observability: monitoring token use

First, you’ll need to understand where you’re at with your current practices of using LLM in your daily workflow. Use a tool to monitor the token use of your queries. There are a range of LLM monitoring and observability tools (both open-source and paid) to help you track token use as well as other characteristics of how your models work. When you are using a cloud provider (such as Openrouter), you should also be able to find your current usage and costs in their billing section.

In addition to tracking token use, establishing observability to implement adequate monitoring of your models also helps optimize performance in many other ways:

- Reducing hallucinations

- Enhancing prompt efficiency

- Faster diagnosis of issues

- Increased security

👀 LLM observability tools

Implementing observability may be an overkill for small projects. But it sure is helpful for large ones. Read up on the basics of LLM observability & a list of the most useful tools: LLM observability: tools for monitoring Large Language Models

Applying prompt engineering to optimize prompts

Prompts (the instructions you provide to the LLM) determine what kind of output you’ll get from your model. Prompt engineering refers to the practice of meticulously designing the prompts that produce optimal outputs that better suit your intents.

Even minor adjustments to your prompts can result in significant improvements in the generated responses. Better prompts can help reduce hallucinations and improve quality. Techniques like chain-of-thought prompting (guiding the LLM through the logical process of generating useful output) can positively impact results but also lead to increased costs.

You’ll have to strike the right balance between getting accurate, useful responses and reducing data input (input tokens) to the model. You’ll want your prompts to be as brief as possible, compressing them while maintaining the usefulness of the output. Practices like explicitly limiting the maximum length of the output in the system prompt help cut costs. Make sure your prompt doesn’t contain any unnecessary information. You may also want to consider consolidating multiple prompts into one or asking the LLM to provide a single file that you can dissect and analyze for your purposes.

LLMLingua is an open-source tool by Microsoft that claims it can automatically “compress” prompts by a factor of 20x by removing redundant information using a small LLM trained specifically for that task.

🤪 Prompting quirks

We found that requiring “100 percent” code coverage performed better than “100 %” in our prompts. Interesting, right? Learn about other prompt engineering quirks in the first deep dive into our DevQualityEval benchmark results: Can LLMs test a Go function that does nothing?

Using Retrieval-Augmented Generation

As a simple rule, the more input context you provide as part of your prompt, the better the responses will be. But simply filling the whole context window (e.g. the maximum amount of input content the LLM can process at once) may be helpful but very expensive. The point is to strike the right balance by providing just enough context.

Retrieval-Augmented Generation (RAG) refers to a group of techniques with which you can help a model perform better for your intended use case while driving down costs by providing just the right context:

- Distilled prompt context: Adding pre-processed information to your prompt can already improve the LLM output. Again, just be careful not to overshoot by adding too much information. Experiment with what is enough to get good results. If you’re using an LLM-backed tool, this likely happens already. For example, the coding agent Aider adds a simplified overview of the codebase called a “repo map” to each prompt to help the LLM understand how the code is composed.

- Embedding Databases: An Embedding Database is an additional knowledge resource in the form of an (external) database so the LLM has access to lots of (up-to-date) information. It’s a cost-efficient and scalable way of enhancing LLM performance. This again is quite a complex endeavor to set up because it needs to be tightly integrated with the used LLM.

Both methods should have a positive impact on the usefulness of LLM output. However, both require time and effort investment (with setting up an Embedding Database being the more costly option). Another good approach is having a second (weaker) model summarize your conversation history with the main LLM, then feeding that back as context for subsequent queries. This reduces the context size and, for example, is available in LangChain.

💡 Enhancing code generation with LLMs

Learn about using Symflower for LLMs to augment prompt engineering, provide higher quality training data, improve LLM outputs, and evaluate the quality of code generated by LLMs.

Reusing context: prompt and response caching

Caching has a huge potential to reduce your LLM costs, but it needs to be supported by the LLM provider in use (or the local LLM setup).

- Prompt caching: The idea is that if your prompt (or parts of it) does not change, then the internal state of the neural network behind the LLM can be saved after processing your prompt. It can then be restored when the same prompt is encountered again in the future, saving costly computation time. It might seem odd at first to pass similar prompts repeatedly to a model, as a one-time answer is usually enough. But especially code-generation applications can benefit from these caching mechanisms because large portions of input code usually stay unchanged. LLMs from Anthropic already support this feature out-of the-box at the time of writing.

- Response caching: With response caching, you’ll store the output previously generated by the model and reuse it for relevant queries. This helps avoid redundant requests, contributing to time and cost savings. You’ll start by setting up a caching mechanism to store generated responses. Any time a request is made, you’ll search this cached repository first, and if there’s a match, you’ll just provide that existing response instead of having the LLM generate a new one. Naturally, there’s some overhead to creating this cache, but it could help you save costs in the long run (especially if you’re managing lots of users and you expect similar questions to come up regularly). This addition pays off the most if you have many similar queries to an LLM, for example in an organizational internal “help chatbot”. A project worth looking into for semantic response caching is GPTCache.

Batching requests

You can send queries to the LLM in batches instead of individual requests. This makes output generation faster and should result in some savings, mostly if you’re hosting LLMs yourself. Each API call comes with some overhead that you can shave off by grouping requests together. In addition to that, it also allows LLMs to make better use of computational resources by parallelizing processes. Even some commercial cloud providers provide special batch APIs for reduced pricing. For example, OpenAI grants a 50% discount for batched API calls – with a catch: the results are to be returned within at most 24 hours. This can be worth looking into if your use case does not require real-time responses.

Using dynamic token management

In addition to (or in place of) static limitations on tokens (e.g. using token quotas for certain tasks), you can also implement dynamic measures to optimize the use of tokens. This is typically done by dedicated token management systems that monitor the way LLMs are used and adapt token allocation as needed. Techniques include token rate limiting, token pooling, and token throttling. In addition to managing costs, dynamic token management can also positively impact performance.

Early stopping

The idea is to set up a manual or automated way to monitor LLM operations and halt generation as soon as you realize the LLM is not generating the output you need. As simple as this solution is, it can help save a lot of resources (not only costs but also time).

FinOps and using cost management tools

FinOps refers to the principles of “Finance” and “DevOps” coming together. This discipline emphasizes the importance of collaboration between business and technical teams working together to minimize costs.

Originally aimed at cloud computing in general, FinOps represents both a cultural shift in the organization as well as a practical framework that helps extend financial governance to the variable costs of using LLMs. Tools like Microsoft Cost Management help monitor & report on LLM use, suggest cost optimization improvements, and gain visibility and accountability into how your LLM costs add up. FinOps techniques include the gamification of cost awareness, setting up cost-efficiency hackathons internally in the organization, and using AI-powered assistants to help optimize costs.

Summary: managing and reducing LLM costs

Knowing your way around models and using AI-powered tools in the most cost-efficient way possible provides a competitive advantage in this LLM-empowered world, especially in a development environment. The above techniques help cut the costs of using LLMs in any deployed domain.

Let us know if you think we’re missing something! Send us a message at hello@symflower.com.

If your goal is to reduce costs in LLM-enabled software development, stay on top of the latest benchmarks evaluating models for such use cases. Remember, the “best model” is not necessarily the best for your programming language, framework, or use case.