This post covers how to use Aider, an LLM-powered software engineering tool, to generate software code.

🦾 Better LLMs for software development

Symflower helps you build better software by pairing static, dynamic and symbolic analyses with LLMs. Combining the robustness of deterministic analyses with the creativity of LLMs enables higher-quality, faster software development.

Table of contents:

- LLM tools for software development

- What is Aider?

- What LLMs does Aider support?

- Features: how Aider can help you code

- How to start using Aider

- Controlling costs

- Generating testing code with Symflower

LLM tools for software development

Many developers use large language models (LLMs) to become more productive. AI can accelerate software development through code completion and code generation, code review and testing, transpiling code, and generating documentation.

What is Aider?

Aider is a free, open-source, AI-powered pair programmer that you can use to edit code in your local Git repository. It’s a command-line chat tool that lets you write and edit code using the large language model of your choice. Aider also offers an experimental browser-based UI.

Aider builds a “map” of your entire repository (essentially a collection of code signatures) and gives it to the LLM, so the model understands your codebase and can make context-aware suggestions.
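To give a feel for what such a map contains, the sketch below lists every class and function definition in a Python code base using plain grep. It is only a crude illustration under stated assumptions (Python files only; `repo_signatures` is a hypothetical helper name), not how Aider works: Aider parses code with tree-sitter and selects which symbols to send to the LLM.

```shell
# Crude, hypothetical approximation of a "repo map": print every Python
# class/function definition under a directory as path:line:signature.
repo_signatures() {
  grep -rn --include='*.py' -E '^[[:space:]]*(class|def|async def) ' "${1:-.}"
}
```

Running `repo_signatures src` prints one `path:line:signature` line per definition, which is the kind of compact overview that lets an LLM see a codebase's structure without reading every file.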

Aider works with most popular languages, including Python, JavaScript, TypeScript, Java, PHP, HTML, CSS, Ruby, Rust, and more. See Aider’s documentation for the full list of supported languages.

What LLMs does Aider support?

Aider connects to almost any LLM, but its developer says it works best with GPT-4o and Claude 3.5 Sonnet. Whichever model you use, keep track of how many tokens you consume, as token usage largely determines your costs (more on this below).

Aider’s documentation lists the models its developer recommends for use with the tool.

If you want to save costs, Aider also works with several providers that offer free API access, including Google’s Gemini 1.5 Pro and Llama 3 70B on Groq. Cohere’s Command-R+ model also works, though only for simple coding tasks.

You can also use local models through Ollama, or any other model served through an OpenAI-compatible API.
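As a sketch, connecting Aider to an OpenAI-compatible endpoint generally means setting the base URL and API key as environment variables and prefixing the model name with `openai/`. The endpoint, key, and model name below are placeholders; check Aider’s documentation for your specific provider.

```shell
# Placeholder values: replace with your provider's endpoint, key, and model name
export OPENAI_API_BASE=https://llm.example.com/v1
export OPENAI_API_KEY=your-api-key
aider --model openai/your-model-name
```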

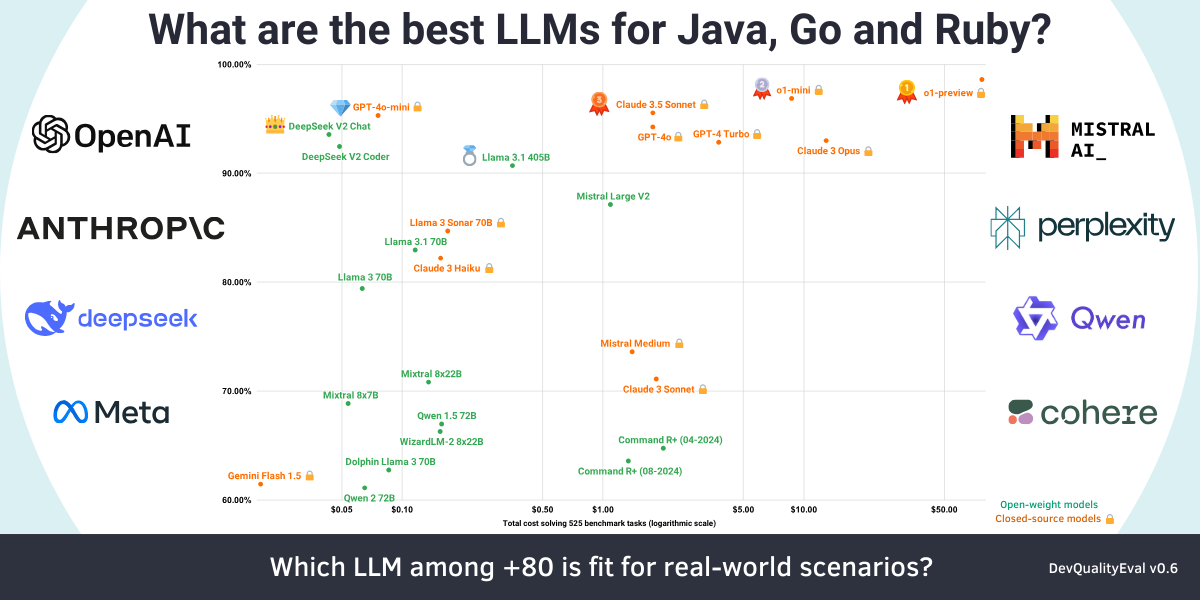

Aider maintains its own LLM leaderboards showing which models currently work best with it. Also check out the DevQualityEval leaderboard, which compares the most popular models across a range of categories using a variety of functional and non-functional metrics.

💡 A blog post series on LLM benchmarking

Read all the other posts in Symflower’s series on LLM evaluation, and see our latest deep dive on the best LLMs for code generation.

Features: how Aider can help you code

Aider lets you collaborate in real time with OpenAI’s GPT models or other LLMs right from your terminal. It can explain your code, add new features, find and fix bugs, refactor code, add test cases, and update documentation, across multiple files if necessary.

You can paste web links to documentation or GitHub issues into the Aider chat, and it will automatically scrape that web page and use it as an additional knowledge resource for the LLM.

One neat thing about Aider is that it applies edits directly to your source files and automatically creates Git commits with descriptive commit messages, so you can easily undo any change it has made (this feature can be turned off).

Prompt caching (available for models that support it, such as Anthropic’s Claude models) can be very helpful for controlling LLM costs when working with Aider. With prompt caching, a large, unchanging block of context at the start of your prompts is processed once and stored by the provider; subsequent prompts that begin with the same content reuse it at a much lower price instead of paying full price every time. Anthropic claims this can reduce costs by up to 90%.
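Aider turns this on with the `--cache-prompts` switch. The back-of-the-envelope calculation below shows where a figure like 90% comes from. It assumes Anthropic’s pricing scheme at the time of writing (writing to the cache costs 1.25× the base input price, reading from it 0.1×) and uses hypothetical numbers: a 50,000-token context reused across 10 prompts at $3 per million input tokens. It ignores output tokens and any uncached part of each prompt; `cached_context_cost` is a hypothetical helper.

```shell
# Back-of-the-envelope prompt caching estimate (hypothetical numbers).
# Assumes cache writes cost 1.25x and cache reads 0.1x the base input price.
#   $1 = cached context size in tokens
#   $2 = number of prompts that reuse that context
#   $3 = base input price in USD per million tokens
cached_context_cost() {
  awk -v t="$1" -v n="$2" -v p="$3" 'BEGIN {
    plain  = n * t * p / 1e6                           # context re-sent at full price every time
    cached = (t * 1.25 + (n - 1) * t * 0.1) * p / 1e6  # one cache write, then n-1 cache reads
    printf "without cache: $%.2f, with cache: $%.2f\n", plain, cached
  }'
}

cached_context_cost 50000 10 3
# prints: without cache: $1.50, with cache: $0.32
```

With 10 prompts the saving is about 78.5%; as the number of prompts grows, it approaches 90% because almost every request reads the context from the cache.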

How to start using Aider

Installing Aider

Installing Aider is super easy:

```shell
pip install aider-chat
```

If you don’t want to install Aider locally, you can run it in a Docker container:

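```shell
docker pull paulgauthier/aider

# Mount the current directory as /app; --user makes files Aider writes belong to you, not root
docker run -it --user "$(id -u):$(id -g)" --volume "$(pwd):/app" paulgauthier/aider --openai-api-key "$OPENAI_API_KEY" [...other aider args...]
```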

You can also use Aider in GitHub Codespaces.

Creating a configuration file and configuring an LLM

Aider can be configured with command-line arguments, a YAML config file, or variables set in your shell environment (or in an `.env` file). Not all configuration options are available in the YAML file, so if you want all of your setup in a single place, we recommend starting with an `.env` file.

In the `.env` file, you can set general Aider options as well as API keys and other settings for the models you use with Aider. To get started, you need at least a valid API key and the name of the LLM you want to use. Place the `.env` file in your home directory, the root of your Git repository, or the current directory, or specify its location with the following parameter:

```shell
aider --env-file <filename>
```

You’ll find a sample `.env` file in Aider's documentation.
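For orientation, a minimal `.env` might look like the sketch below. The key is a placeholder, and the option variables rely on Aider’s convention of exposing each command-line option as an `AIDER_`-prefixed environment variable; compare with the sample file in the documentation before relying on it.

```shell
# Minimal, illustrative .env for Aider (placeholder key; adjust to your setup)
OPENAI_API_KEY=your-openai-api-key
# Same effect as passing --model gpt-4o on the command line
AIDER_MODEL=gpt-4o
# Same effect as --no-auto-commits: you commit changes yourself
AIDER_AUTO_COMMITS=false
```

Then run `aider` from a directory where it picks up this file, or pass its path explicitly with `--env-file`.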

Using Aider to write software code

Run `aider` followed by the source files you want to edit; if you name files that don’t exist yet, Aider will create them. Pro tip: add only the files that actually need editing to the chat session, nothing extra! Aider automatically pulls in context from related files to give the LLM what it needs to help you code.
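A typical start might look like this. The file names are hypothetical (an existing module to edit and a test file Aider may create), and `--model` picks the LLM for this session.

```shell
# Hypothetical files: only the ones you want Aider to edit
aider --model gpt-4o src/cart.py tests/test_cart.py
```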

From here on, you can just use natural language prompts to ask Aider for code changes in your files. There’s a wide range of commands you can use, including:

- `/add`: Add files to the chat so it can edit them or review them in detail

- `/drop`: Remove files from the chat session to free up context space

- `/commit`: Commit edits to the repo made outside the chat (commit message optional)

- `/diff`: Display the diff of the last aider commit

- `/lint`: Lint and fix provided files or in-chat files if none provided

- `/model`: Switch to a new LLM

- `/tokens`: Report on the number of tokens used by the current chat context

- `/undo`: Undo the last git commit if it was done by aider

- `/voice`: Record and transcribe voice input

Source: aider.chat

The last command is especially interesting, as it lets you code using your voice. You can also add images to the chat and use a range of keybindings to work with Aider faster. Additionally, you can point Aider to a Markdown file of coding conventions, such as CONVENTIONS.md, and Aider will pass these conventions on to the LLM you’re using.
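As an illustration, the snippet below writes a small conventions file; the rules in it are made up. Aider’s documentation describes loading such a file read-only with `--read`, so the LLM treats it as guidance rather than as a file to edit.

```shell
# Create an example conventions file (the rules themselves are made up)
cat > CONVENTIONS.md <<'EOF'
# Coding conventions
- Prefer httpx over requests for HTTP calls.
- Add type hints to all new functions.
- Keep functions under 40 lines.
EOF
```

You would then start Aider with `aider --read CONVENTIONS.md`.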

You can even configure Aider to automatically run a linter or a test suite on the LLM’s changes to check that everything still works. Aider then sends any errors it finds back to the LLM, giving it a chance to fix them.
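A sketch of such a setup, assuming a Python project whose tests run with pytest (substitute your own test command): `--test-cmd` defines the command, and `--auto-test` asks Aider to run it after each change.

```shell
# Run the project's tests after every change Aider applies (pytest is an assumption)
aider --auto-test --test-cmd "pytest -q"
```

Aider’s documentation also describes `--lint-cmd` for plugging in your own linter in the same way.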

You’ll find a variety of tutorial videos in Aider's documentation to help you get started.

Controlling costs

We’d say the biggest drawback of Aider is that you have to track and optimize costs yourself. Instead of a flat subscription fee (as with GitHub Copilot, for example), you pay for the tokens you use, so it’s your responsibility to choose the LLM and to use its context sparingly.

To reduce the costs during programming sessions, you should:

- Select an LLM with a good cost-performance ratio (i.e. select the cheapest model that is just “good enough” for the complexity of your code). Our own DevQualityEval benchmark can help you make this decision.

- Make sure to use a model that supports a diff-like editing format (basically the model responds only with the parts of the code that it wants to change, and not the whole file). Check out the Aider leaderboards for a table of which LLMs work best with which edit format.

- Make frequent use of the Aider chat commands `/drop <files…>` (to remove files you know are no longer needed) and `/clear` (to clear the chat history) to prune the context. You can use `/tokens` to check how much context Aider is currently using.
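To see why the edit format matters, compare the output-token cost of the model re-sending a whole file with sending only the changed section. The token counts and the $15-per-million output price are hypothetical round numbers, and `edit_cost` is a hypothetical helper. In Aider, you can request a format explicitly with `--edit-format` (for example `diff`), although it normally picks a suitable default for each model.

```shell
# Output-token cost of one edit (hypothetical token counts and price).
#   $1 = output tokens the model generates
#   $2 = price in USD per million output tokens
edit_cost() {
  awk -v t="$1" -v p="$2" 'BEGIN { printf "%.4f\n", t * p / 1e6 }'
}

whole_cost=$(edit_cost 4000 15)  # model rewrites an entire 4,000-token file
diff_cost=$(edit_cost 300 15)    # model returns only the changed block
echo "whole file: \$$whole_cost, diff: \$$diff_cost"
# prints: whole file: $0.0600, diff: $0.0045
```

Per edit, the diff-style answer is roughly 13 times cheaper in this example, and the gap adds up over hundreds of edits.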

💸 Find out how to better control LLM costs

Read our post: Managing the costs of LLMs

If you don’t want to use a cloud-based LLM provider (for privacy or cost reasons) but happen to have a decent GPU lying around, you can pair Aider with Ollama to run LLMs entirely locally and create an offline AI coding buddy.
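A minimal sketch, assuming Ollama is installed and listens on its default port 11434, with Llama 3 as an example model. Aider’s Ollama documentation recommends the `ollama_chat/` model prefix; check it for current advice before relying on these exact values.

```shell
# Start the Ollama server (skip if it already runs as a background service)
ollama serve &
# Download an example model
ollama pull llama3

# Tell Aider where Ollama listens and which local model to use
export OLLAMA_API_BASE=http://127.0.0.1:11434
aider --model ollama_chat/llama3
```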

How good is Aider at generating software code?

Aider’s developer claims it is a top scorer on the SWE-bench Lite benchmark. At the time of writing, Aider (specifically, Aider with GPT-4o and Claude 3 Opus) ranks 14th on this leaderboard.

However, on 2 June 2024, Aider’s developer reported that the tool scored 18.9% on the main SWE-bench benchmark and 26.3% on SWE-bench Lite (pass@1). The main-benchmark score beat that of the previous leader, the Amazon Q Developer Agent, and both results were state of the art at the time.

👀 What are the best LLMs for coding?

Check out our deep dives of the DevQualityEval benchmark and framework designed to compare and evolve the quality of code generation of LLMs:

- Anthropic’s Claude 3.7 Sonnet is the new king 👑 of code generation (but only with help), and DeepSeek R1 disappoints (Deep dives from the DevQualityEval v1.0)

- OpenAI’s o1-preview is the king 👑 of code generation but is super slow and expensive (Deep dives from the DevQualityEval v0.6)

- DeepSeek v2 Coder and Claude 3.5 Sonnet are more cost-effective at code generation than GPT-4o! (Deep dives from the DevQualityEval v0.5.0)

- Is Llama-3 better than GPT-4 for generating tests? And other deep dives of the DevQualityEval v0.4.0

- Can LLMs test a Go function that does nothing?

From our own experience, Aider is a very solid AI pair programmer that can do everything you would expect from an AI coding assistant. It is simple to use yet very powerful and provides some clever features like automatic web scraping, convention specifications, verifying LLM changes with linters or tests, and voice coding.

Using it from the CLI or the web might seem cumbersome at first, but this simplicity can also be beneficial (e.g. if you work mostly in the terminal anyway), and it lets the Aider development community focus solely on the AI aspect instead of maintaining countless editor plugins.

That said, the community is already developing third-party editor plugins. It is also exciting to follow the development process, as new releases with features and fixes usually ship every week.

While the initial setup is trivial, it pays off to learn more about the edit format Aider uses to communicate changes with the LLM, and to pay attention to how many files are currently in the LLM’s context. These two factors have a great impact on how many input and output tokens each request consumes and, in turn, how much the selected LLM costs to use.

Using Aider for software code generation

Overall, Aider is a very solid choice if you’re looking for LLM-based code generation. It is easy to use, offers powerful features, and can be configured to suit your needs. And voice coding rocks 🦾. The only real drawback is that you have to keep an eye on the costs.

Aider’s results look promising (see its SWE-bench rankings above), making it a top contender for a genuinely useful AI tool for software development. Naturally, as with all things AI-related, your experience may vary depending on your codebase, programming language, and the complexity of your application.

Generating testing code with Symflower

If you’re using LLMs in your software development workflow and want to reduce the effort and cost of testing your projects, Symflower is another useful tool. It generates smart test templates and complete test suites, and provides test-backed diagnostics based on the generated tests. Symflower’s team can also help you find the right LLMs for your project, apply static code repair for an average +26% increase in functional scores, apply RAG and fine-tuning to improve results, and make LLMs work faster with an average -29% reduction in test execution times.

Here’s an example of working with Symflower:

Have a project you could use help with? Get in touch with us.